"Angel of Death" - Ubiquitin

Copper, Steel - 9"x9"x16"

2011

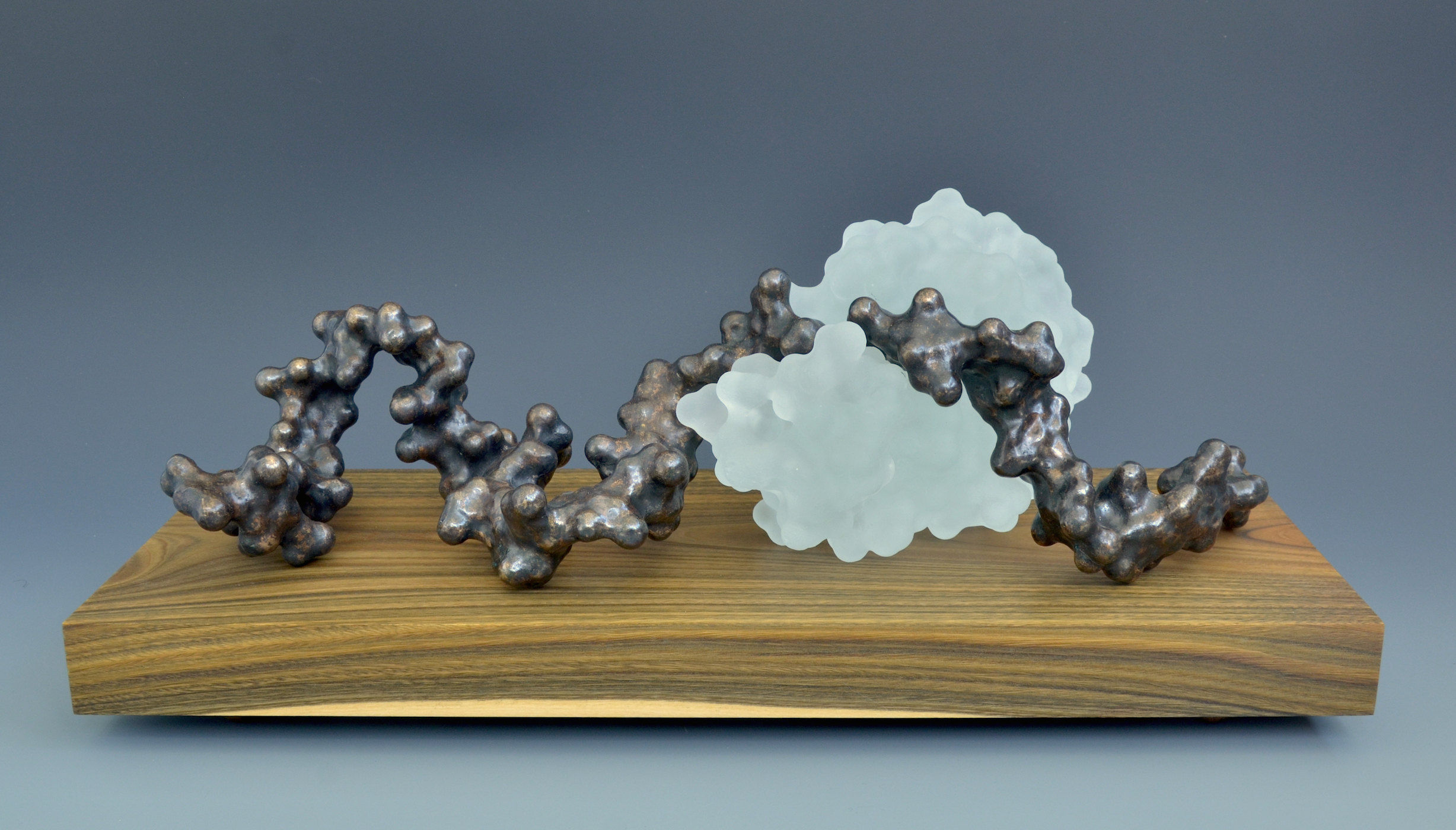

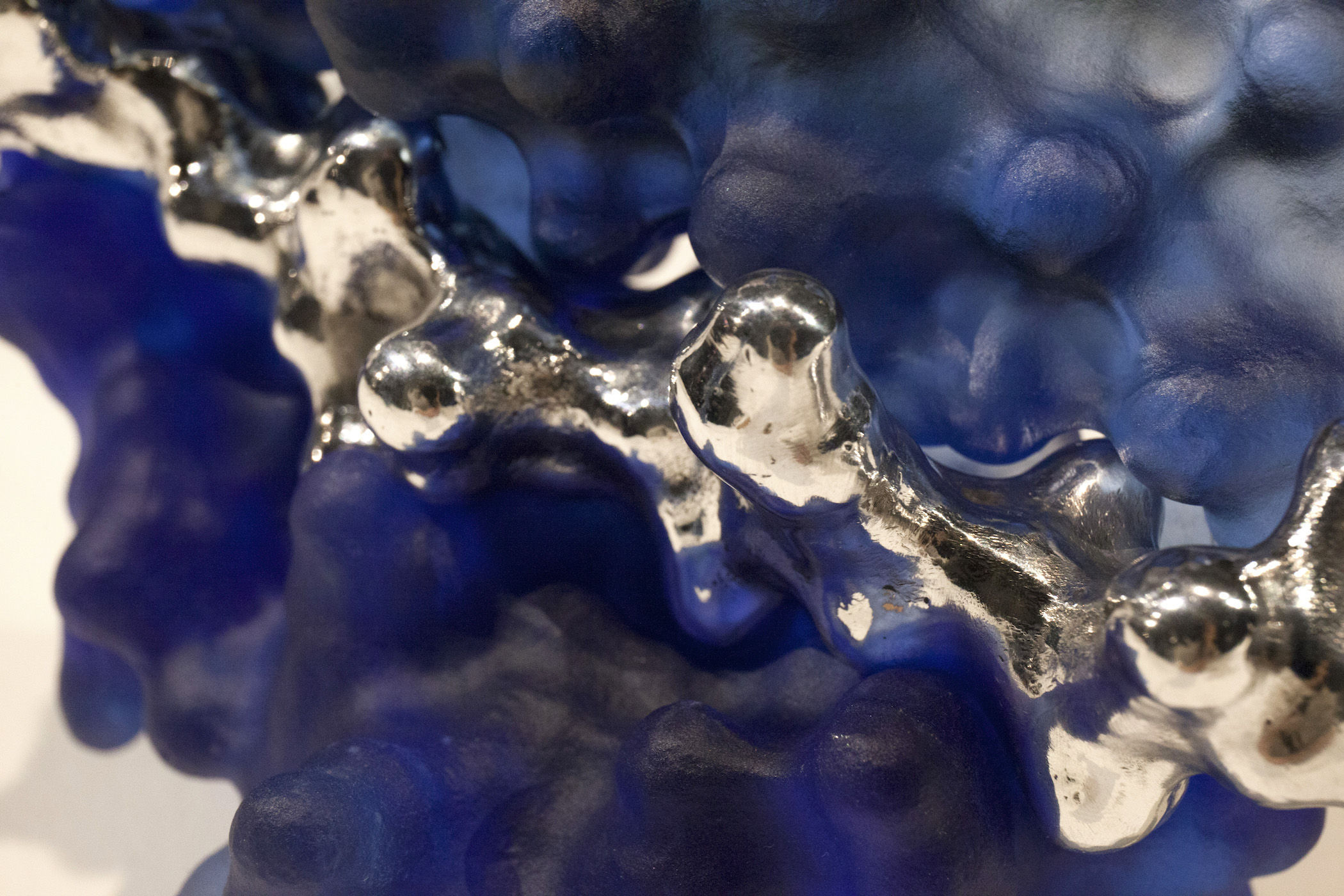

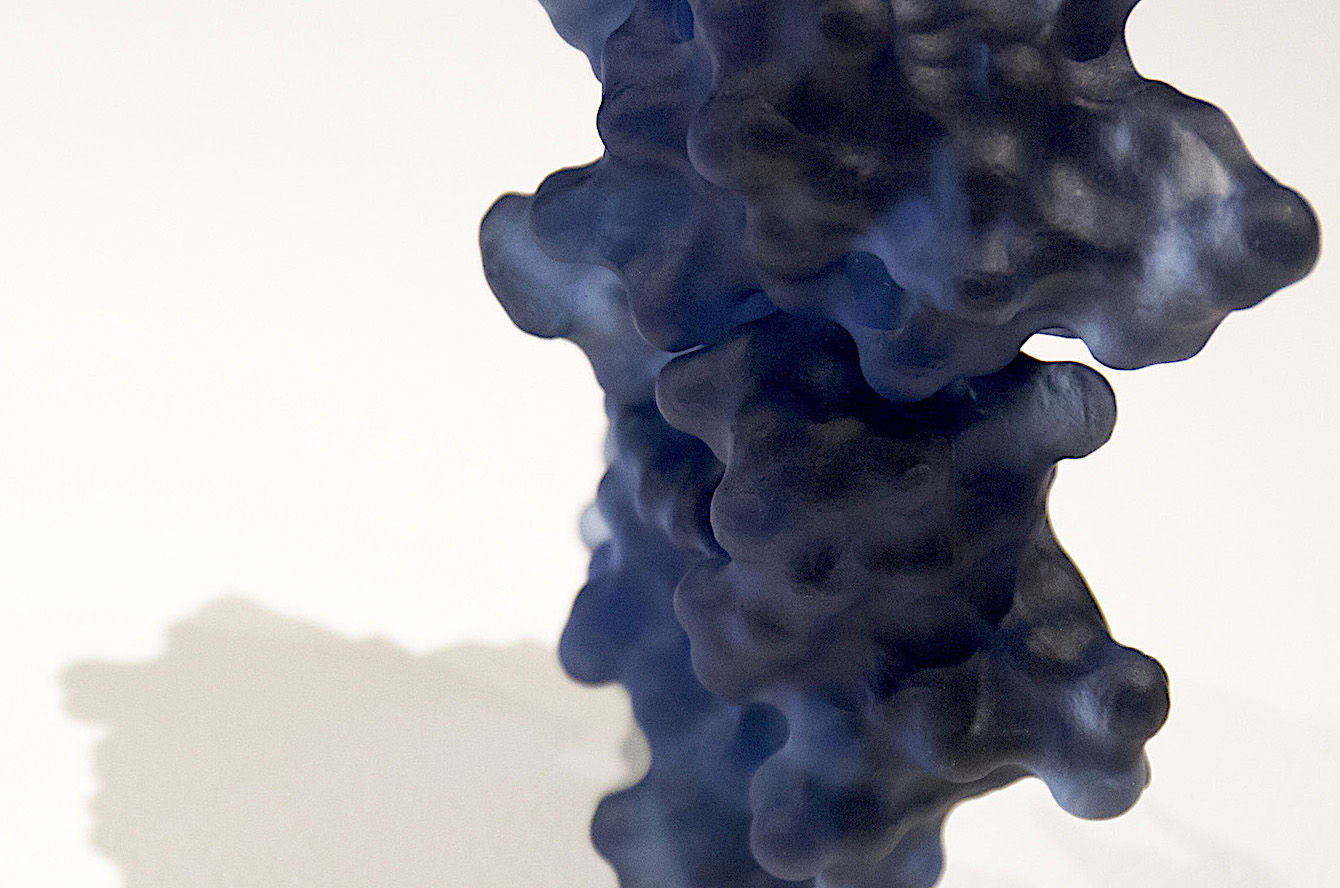

"Tears" - Lysozyme with carbohydrate

Cast bronze, Cast Glass, Wood - 20"x10"x12"

2015

Tears display antibacterial activity, a property that was discovered by Alexander Fleming in the early 1920s. The active agent, Lysozyme, is also found in saliva, nasal mucus and even breast milk and constitutes a major element in the body's innate immune defense.

Lysozyme was also one of the first proteins, and the very first enzyme, to have its three-dimensional structure elucidated, in the 1960s, through painstaking X-ray crystallography work by David Phillips. The coordinates obtained were used here to create the initial 3D model of the protein, which was then 3D printed in plastic, post-processed and recast in clear lead glass using direct lost-PLA casting.

Many bacteria implicated in human disease have a protective cell wall. Lysozyme is an enzyme which digests these carbohydrate barriers, thereby significantly weakening the potential intruders. The carbohydrate, part of which is cast here in bronze, fits tightly into the active site cleft of the Lysozyme enzyme. Deep inside that cleft the cleavage reaction takes place, catalysed by the protein. After cleavage Lysozyme releases both parts and moves on to a new cleavage site, gradually breaking down the cell wall. Discovery of the atomic structure led to the first detailed description of an enzymatic mechanism and was a major breakthrough in our understanding of how our bodies' metabolism functions.

"Portal" - Bacterial Porin

Copper, Steel, Wenge Wood - 12"x12"x24"

2012

The boundary of cellular life, which delineates the living chemistry from its surroundings, was among the most important fundamental inventions of evolution eons ago. Protein channels span these molecular castle walls and regulate the diffusional traffic of molecules trying to enter or leave the cell. One class of these molecular gatekeepers is the Porins, beta-barrel proteins that are situated in the outer membranes of cells or organelles such as human mitochondria. The Porin channel is partially blocked by a loop, called the eyelet, which projects into the cavity and defines the size of solute that can traverse the channel. Porins can be chemically selective: they may transport only one group of molecules, or may be specific to a single molecule. For example, for an antibiotic to be effective against a bacterium, it must often pass through an outer membrane Porin. Bacteria can develop resistance to the antibiotic by mutating the gene that encodes the Porin; the antibiotic is then excluded from passing through the outer membrane. The scale used in this sculpture is 2.5 inch/nm (a magnification factor of about 63 million). At this magnification a grain of rice would roughly span the distance between Seattle and Portland.
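The scale arithmetic quoted above can be checked with a short calculation. This is only a sketch: the function names are made up for illustration, and the 5 mm rice grain length is an assumption that the text does not state.

```python
# Unit conversions (exact by definition).
METERS_PER_INCH = 0.0254
METERS_PER_NANOMETER = 1e-9


def magnification(inches_per_nm: float) -> float:
    """Return the dimensionless magnification for a scale given in inch/nm."""
    return inches_per_nm * METERS_PER_INCH / METERS_PER_NANOMETER


def scaled_length_km(real_length_m: float, factor: float) -> float:
    """Return the length in kilometres of an object after magnification."""
    return real_length_m * factor / 1000.0


factor = magnification(2.5)

# Assumption: a grain of rice is about 5 mm long (not stated in the text).
RICE_GRAIN_M = 0.005
rice_km = scaled_length_km(RICE_GRAIN_M, factor)

print(f"magnification factor: {factor:.3g}")
print(f"magnified rice grain: {rice_km:.1f} km")
```

Under the 5 mm assumption the magnified grain comes out at a little over 300 km, which is the same order of magnitude as the Seattle to Portland distance.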

The Annealing - DNA

Cast glass, Cast bronze, Vera wood - 16"x9"x11"

2016

Two short complementary DNA strands have found each other and are annealing together to form one double-stranded DNA molecule. The ability of DNA to stick to itself in an extremely specific manner, like a programmable velcro, has been of great interest to researchers developing nanomaterials. A huge variety of shapes, lattices, even small molecular machines can be constructed using DNA - the amazing thing is that the components can simply all be combined in solution and the structure builds itself. This has no equivalent in our macroscopic world. Throwing the parts of a kid's puzzle into a tumble dryer would hardly result in the puzzle being solved. Yet at the microscopic scale the rules are different. Proteins exhibit a similar ability to fold themselves and assemble into larger complexes. This sort of self-assembly is at the base of life itself; its parts constantly self-assemble into working nanomachines which catalyse and manipulate other parts of living cells.

"Top7" - Novel synthetic fold

Copper, Steel, Gold plating - 24"x14"x14"

2022

Top7 was the first de novo designed protein with a fold not seen in nature. This piece was created as a commission for the Institute for Protein Design (IPD) on their 10th anniversary, as a special gift to David Baker (my former postdoc advisor). This sculpture comprises two representations of the protein Top7: one as a solvent-accessible surface and one as a ribbon diagram. The half mirror allows the viewer to appreciate both representations superimposed on one another.

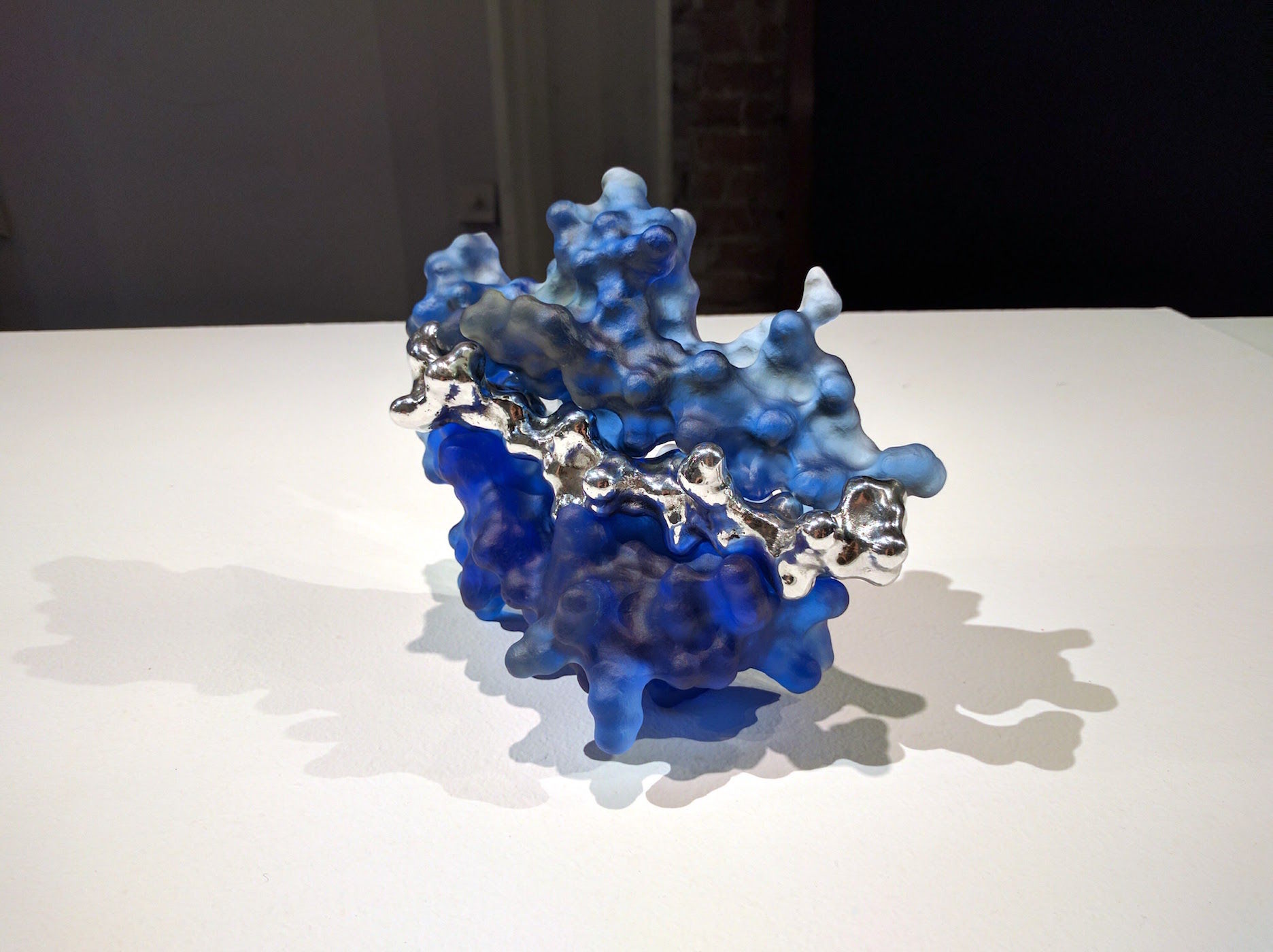

"Synaptic Kiss" - MHC and TCR

Cast glass, Cast aluminum

2016

One of the most important parts of the human immune system is the interaction between the Major Histocompatibility Complex (MHC) and the T-Cell receptor.

The MHC is found on the surface of normal cells and special antigen-presenting cells. The MHC presents on its surface small peptides, which are digested fragments of proteins. The T-Cell scans these presented fragments using its receptor, looking for any fragment that might be foreign. If an unauthorized sequence is detected, it indicates that an intruding organism has entered the body, and an immune response is mounted against any other cells showing the same signature. This is particularly important in fighting viral infection. Since viruses do not have their own metabolism they are difficult to kill. Instead their replication cycle is interrupted by circulating T-Cells actively killing human host cells which are infected (and will betray their infection using the MHC-TCR mechanism).

There is a tight lock-and-key fit between MHC and TCR - a delicate and intimate interaction between two molecules whose surfaces are highly complementary. The peptide itself is cast in aluminum while the two partners, each composed of two separate subunits, are cast in glass.

"Savior" - IgG

Copper, Steel, Gold/Chrome plating - 56"x50"x18"

2013

The machinery of life, an inevitably complex system, must constantly defend itself from intrusion and subversion by other agents inhabiting the biosphere. Ever more intricate systems for the detection and thwarting of intruding foreign life forms have evolved over the eons, culminating in adaptive immunity with one of its centerpieces: The Antibody. Also known as Immunoglobulin, this pronged, Y-shaped protein structure is capable of binding, blocking and neutralizing foreign objects such as bacteria or viruses.

The two tips of the Y have special patches which can tightly recognize and bind a target. Our body generates astronomical numbers of variants, each recognizing a different shape. The variety is so great that completely alien molecules can be recognized even though the body has never encountered them before. Once bound, the antibody blocks the function of the foreign object by physically occluding its functional parts. The Antibody sacrifices itself in the process but not before signalling to the immune system to make more of its specific variant form. After the intruder in question has been fought off, memory cells remain in the bloodstream; should reinfection occur, they can quickly be reactivated to produce more of the successful variant Antibody.

DNA Study 1

Cast lead glass, Steel - 9"x7"x4"

2015

DNA, or deoxyribonucleic acid, is a two-stranded helical polymer which is used by virtually all known forms of life to encode genetic information. It is composed of repeating units called nucleotides, of which there are four variants, distinguished by their bases: Adenine (A), Thymine (T), Cytosine (C) and Guanine (G). The bases are flat molecules which stack on top of each other like a set of double stairs, held together by a sugar-phosphate backbone which runs on the outside. This arrangement was famously discovered through work by Francis Crick, Rosalind Franklin, and James Watson. Critically, every A is paired with a T on the opposite strand and every G is paired with a C. This means that each strand contains all the information needed to recreate the other, thus immediately suggesting a straightforward way in which the information could be copied. Indeed, during cell replication the strands separate and complex protein machinery rebuilds the opposite strand for each of the original single strands. Natural errors during this process introduce mutations and are a major component of the evolutionary forces that led from the early simple organisms billions of years ago to the highly sophisticated ones found today.
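The pairing rule described above is simple enough to sketch in a few lines. The function name and the 11-letter example sequence below are hypothetical, chosen only for illustration; the reversal reflects the fact that the two strands of a double helix run in opposite directions.

```python
# Pairing rules from the text: A pairs with T, G pairs with C.
PAIRING = {"A": "T", "T": "A", "G": "C", "C": "G"}


def complementary_strand(strand: str) -> str:
    """Rebuild the opposite strand from a single strand.

    The two strands run in opposite directions, so the result is reversed
    to keep the conventional 5'-to-3' reading order.
    """
    return "".join(PAIRING[base] for base in reversed(strand))


# Hypothetical 11-nucleotide sequence, used only as an example.
strand = "ATGCCGTAAGC"
partner = complementary_strand(strand)

print(strand, partner)
```

Applying the function twice returns the original sequence, which is the sense in which each strand carries all the information needed to recreate the other.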

Shown here is a short section of double-stranded DNA (about 11 nucleotide units) which would be only 3.6 nanometers long in reality.

KcsA Potassium Channel

Copper, Steel - 14"x14"x20"

2011

Potassium channels form potassium-selective pores that span cell membranes. They are the most widely distributed type of ion channel and are found in virtually all living organisms. The four identical subunits are situated in a four-fold symmetrical manner around a central pore, which allows potassium ions to pass freely. At the top of the structure, formed by four loops lining the pore, a selectivity filter is situated which prevents other ions (such as sodium ions) from passing. The correct ions are detected by their size and charge. Note that no active pumping of ions occurs; the channel merely allows passive conductance of ions down the concentration gradient between the two sides of the membrane.

The KcsA is an archetypal membrane protein with eight tightly packed membrane-spanning α-helices. The four short helices in the center, where the chain crosses half the membrane and then returns to the top, are a more unusual feature.

"Prometheus"

Copper, Steel, Walnut, Gold - 10"x6"x6"

2014 - Series of 3, private collection

It is a brave new world, moving from our mechanical world, born in the industrial revolution, to the biotechnological future.

The gears of life are complex, encoded polymers, nanomachines that create order out of disorder and harness the free energy of the sun to perpetuate the information they carry into the unknown. Now, man begins to alter that very microscopic machinery that constitutes his existence, that gives rise to his consciousness out of inorganic matter, ever driven forward by his curiosity and desire to manipulate his surroundings and himself.

White-Chen catalyst

Cast lead glass, Steel, Magnets - 14"x12"x12"

2015, private collection

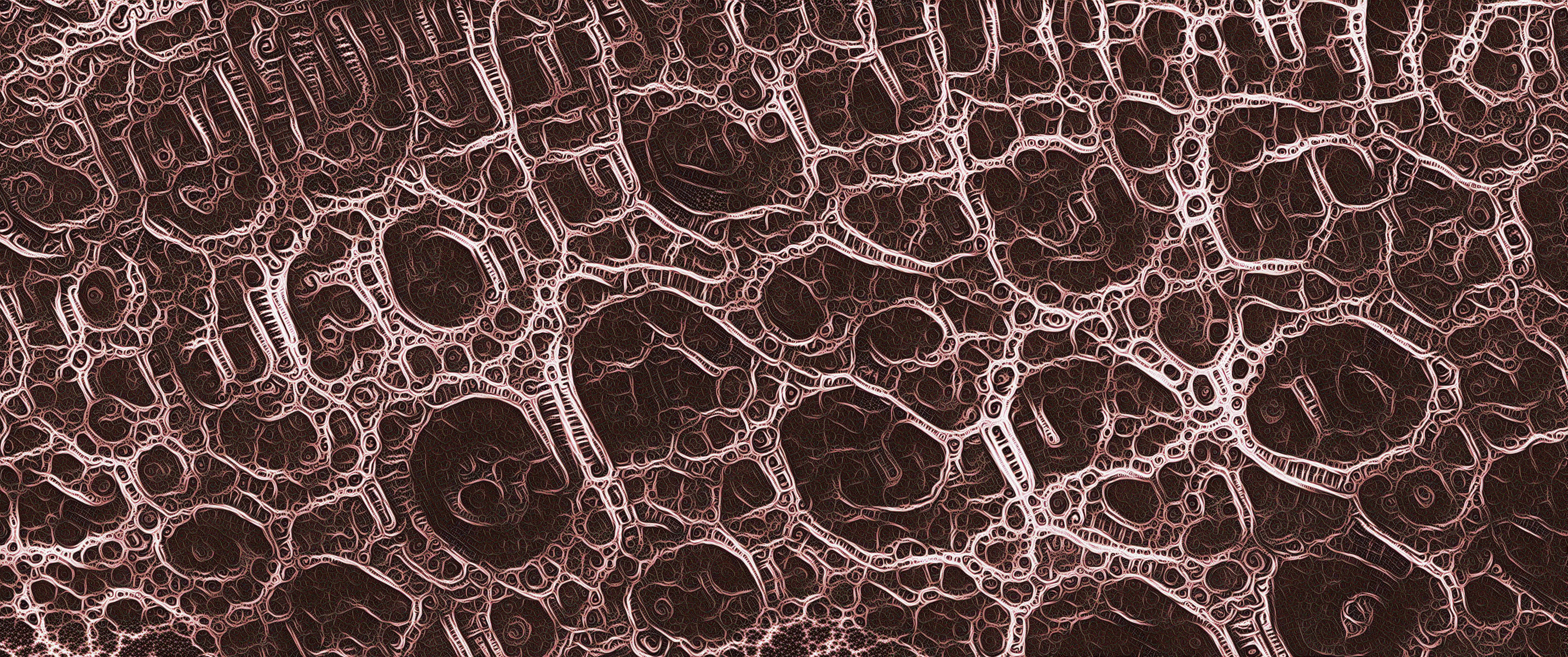

Die Ankunft

Neural net, NFT, Edition size: 1/1

Artist's Proof, 36x66" print, Private Collection

NFT 1/1

2016

Birds II

Neural net, NFT, Edition size: 1/1

Artist's Proof, 36x60" print, Private Collection

NFT 1/1

2016

Redshift

Neural net, NFT, Edition size: 1/1

Artist's Proof, 36x60" print, Private Collection

2016

Surrender

Neural net, NFT, Edition size: 1/1

Artist's Proof, 36x60" print, Private Collection

NFT 1/1

2017

The Portal

Neural net, NFT, Edition size: 1/1

2016

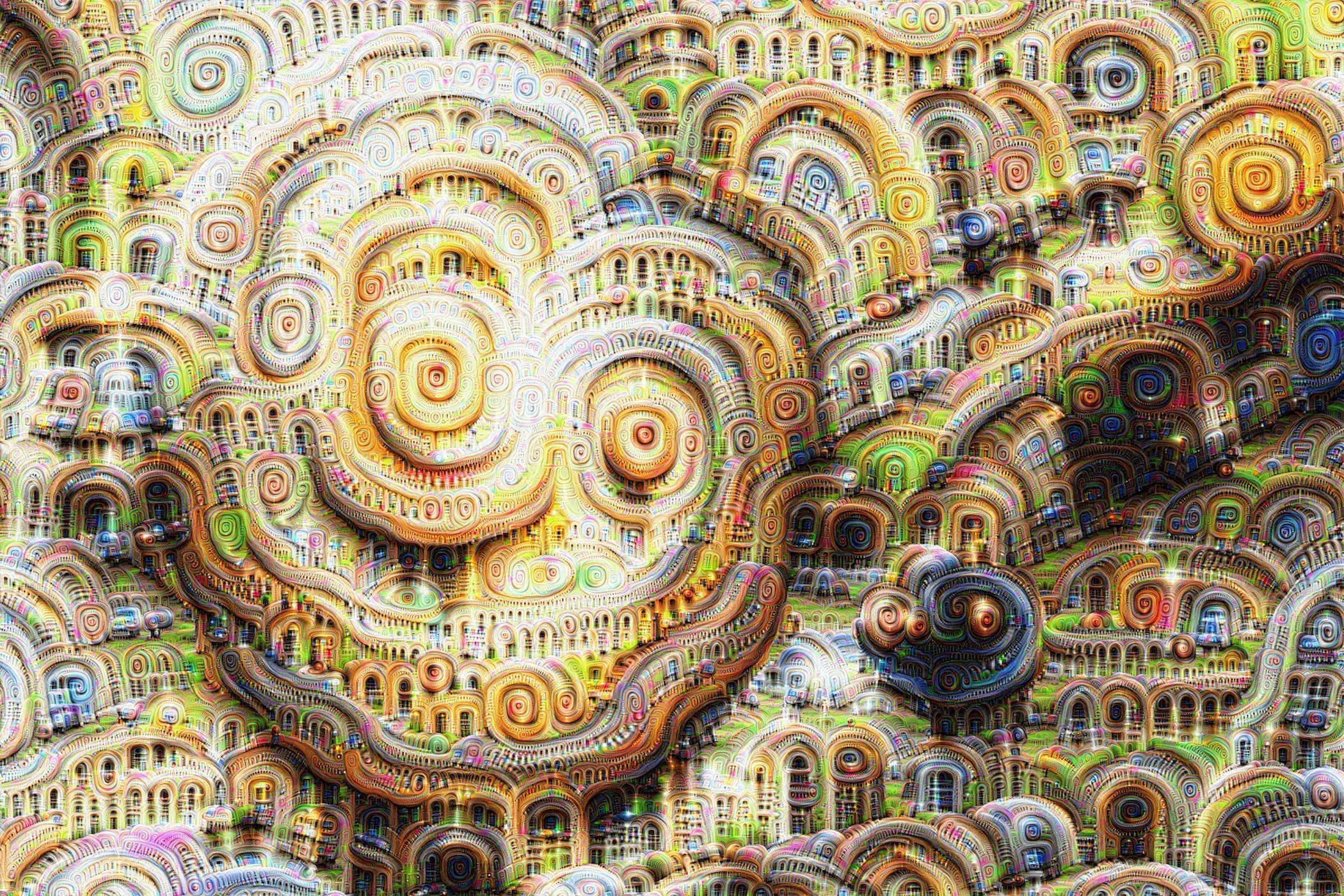

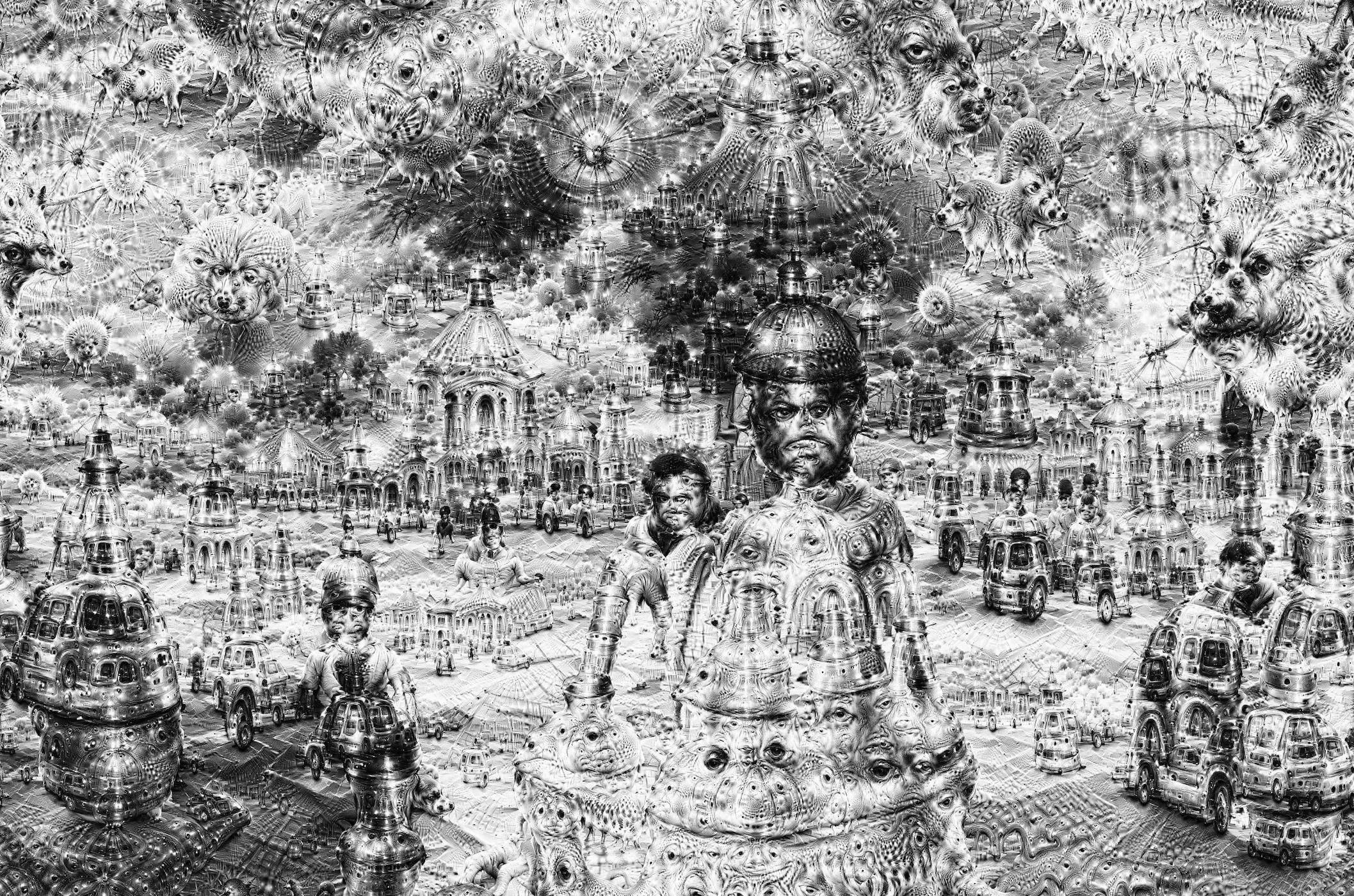

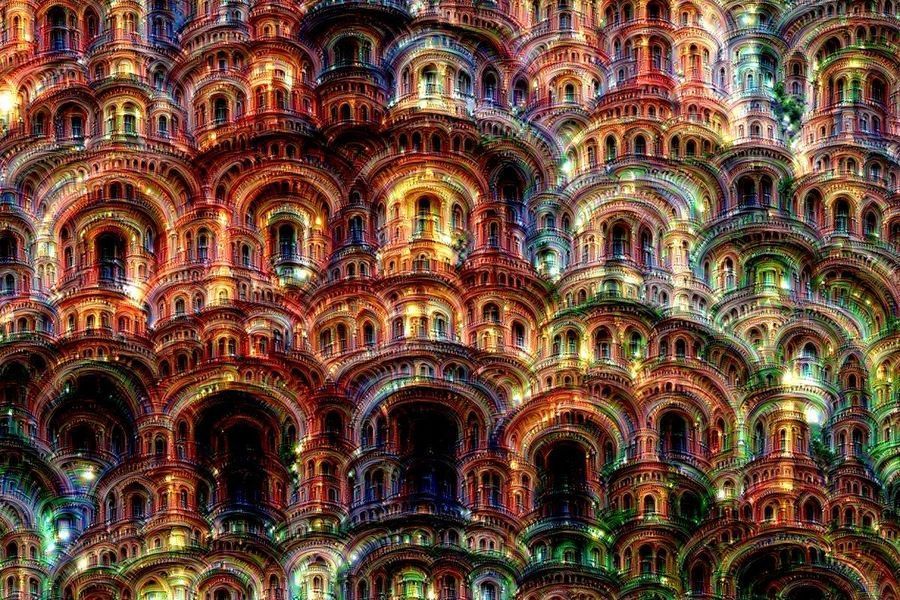

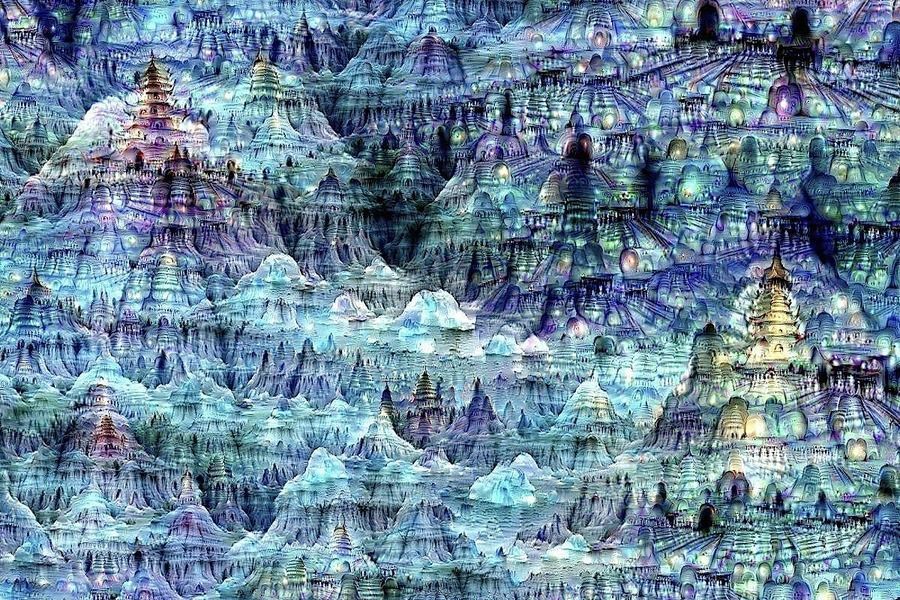

Castles In The Sky With Diamonds

Neural net, Archival print, 60"x48", SOLD

2016

How We End Up At The End Of Life

Neural net, Archival print, 60"x48", SOLD

2016

Title generated by LSTM by Ross Goodwin.

Ground still state of God’s original brigade

Neural net, Archival print, 60"x48", SOLD

2016

Title generated by LSTM by Ross Goodwin.

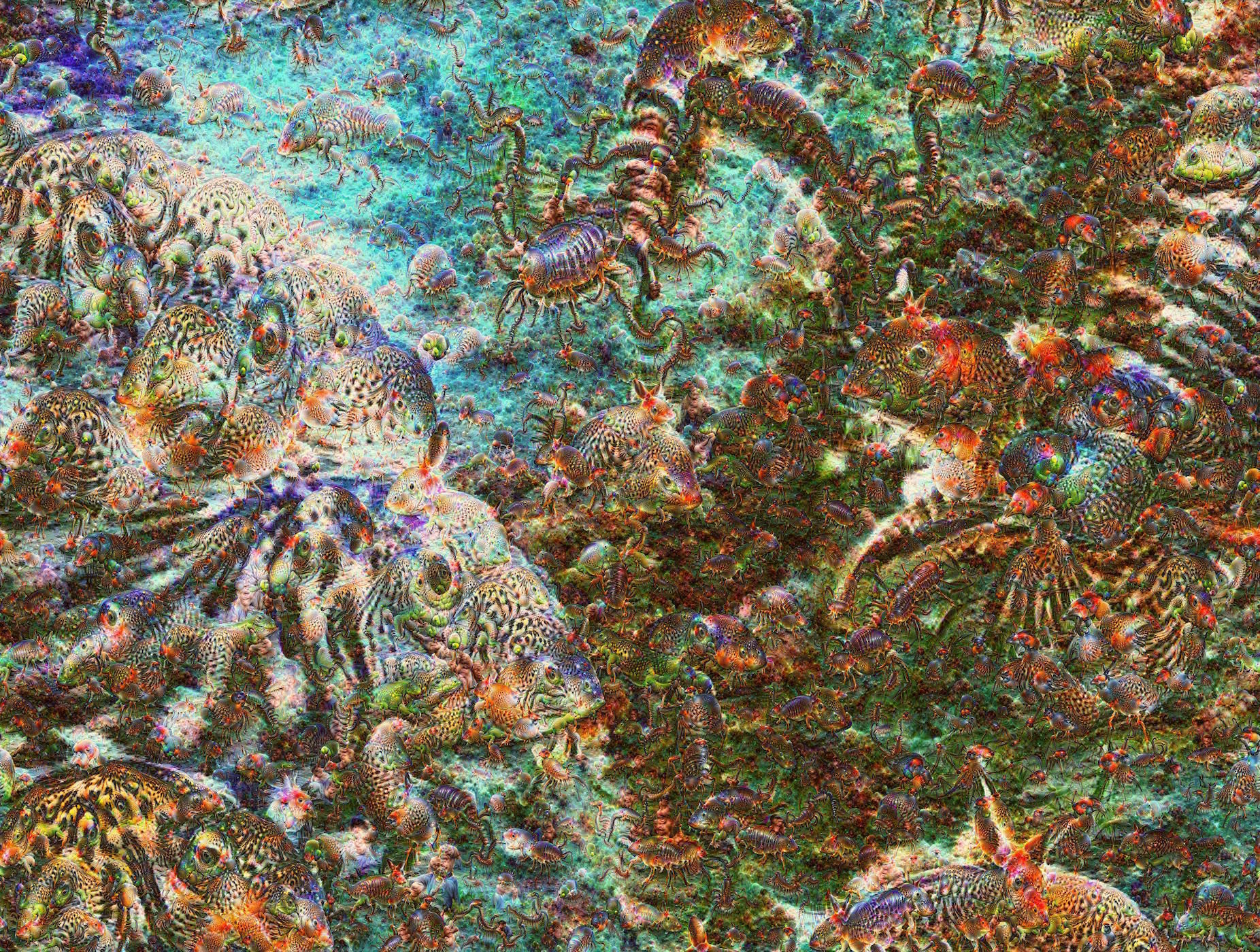

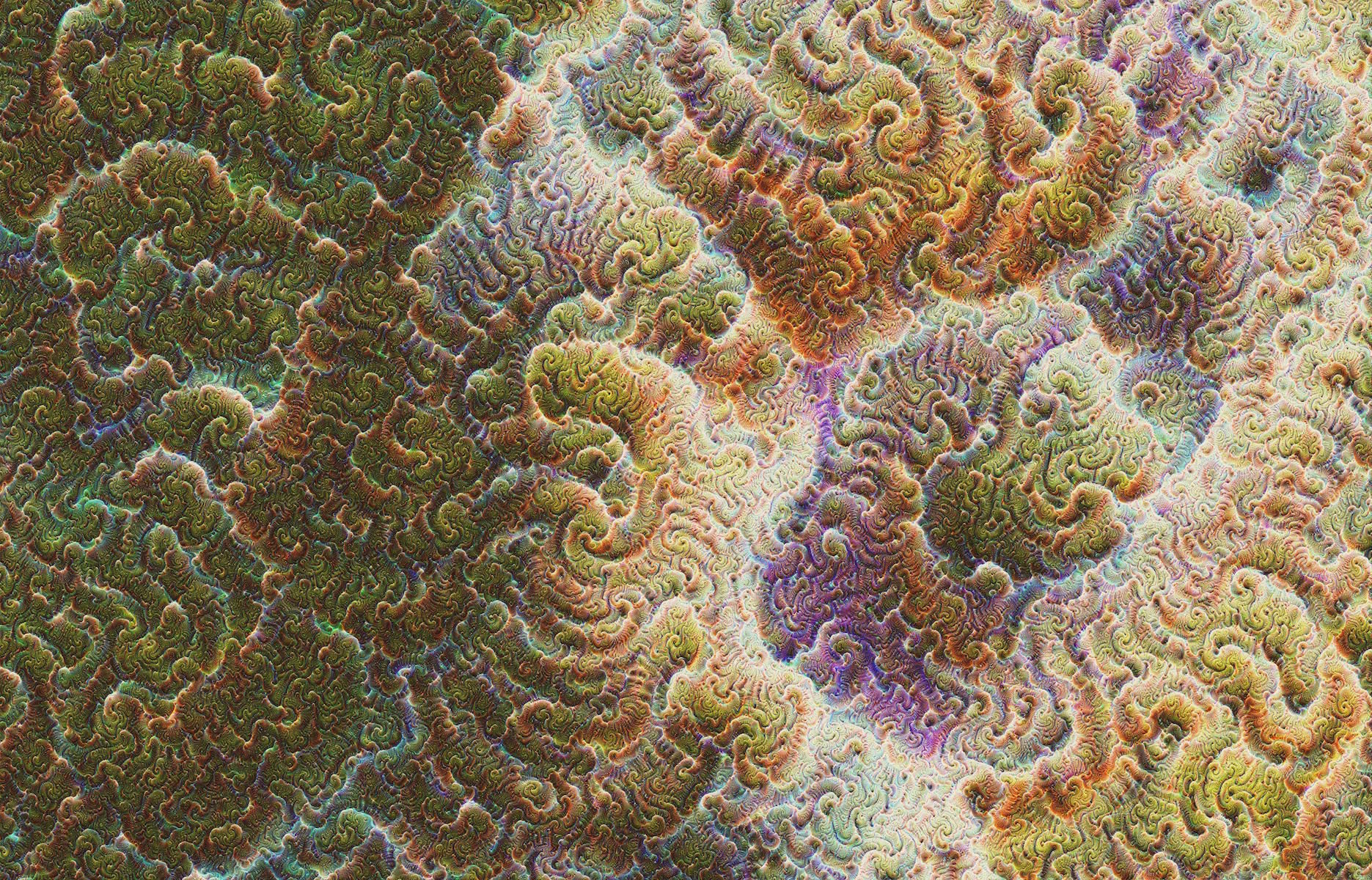

Carboniferous Fantasy

Neural net, Archival print, 60"x48", SOLD

2016

Jurogumo

Neural net, Archival print, 38"x31", SOLD

2016

Mukade

Neural net, Archival print, 38"x31", SOLD

2016

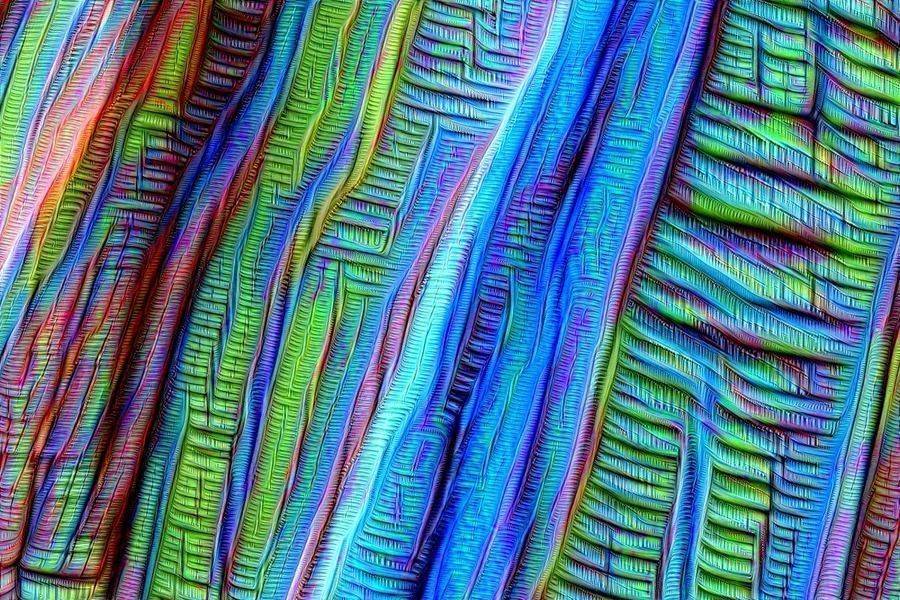

Fabric Of Mind

Neural net, Archival print, 100"x48", SOLD

2016

The Babylon Of The Blue Sun

Neural net, Archival print, 66"x50", SOLD

2016

Title generated by LSTM by Ross Goodwin.

The Fall

Neural net, Archival print, 71"x52", SOLD

2016

Title generated by LSTM by Ross Goodwin.

Here Was The Final Blind Hour

Neural net, Archival print, 74"x52", SOLD

2016

Title generated by LSTM by Ross Goodwin.

Himalayas

Neural net, Lasercut wood, 30"x24"

2016

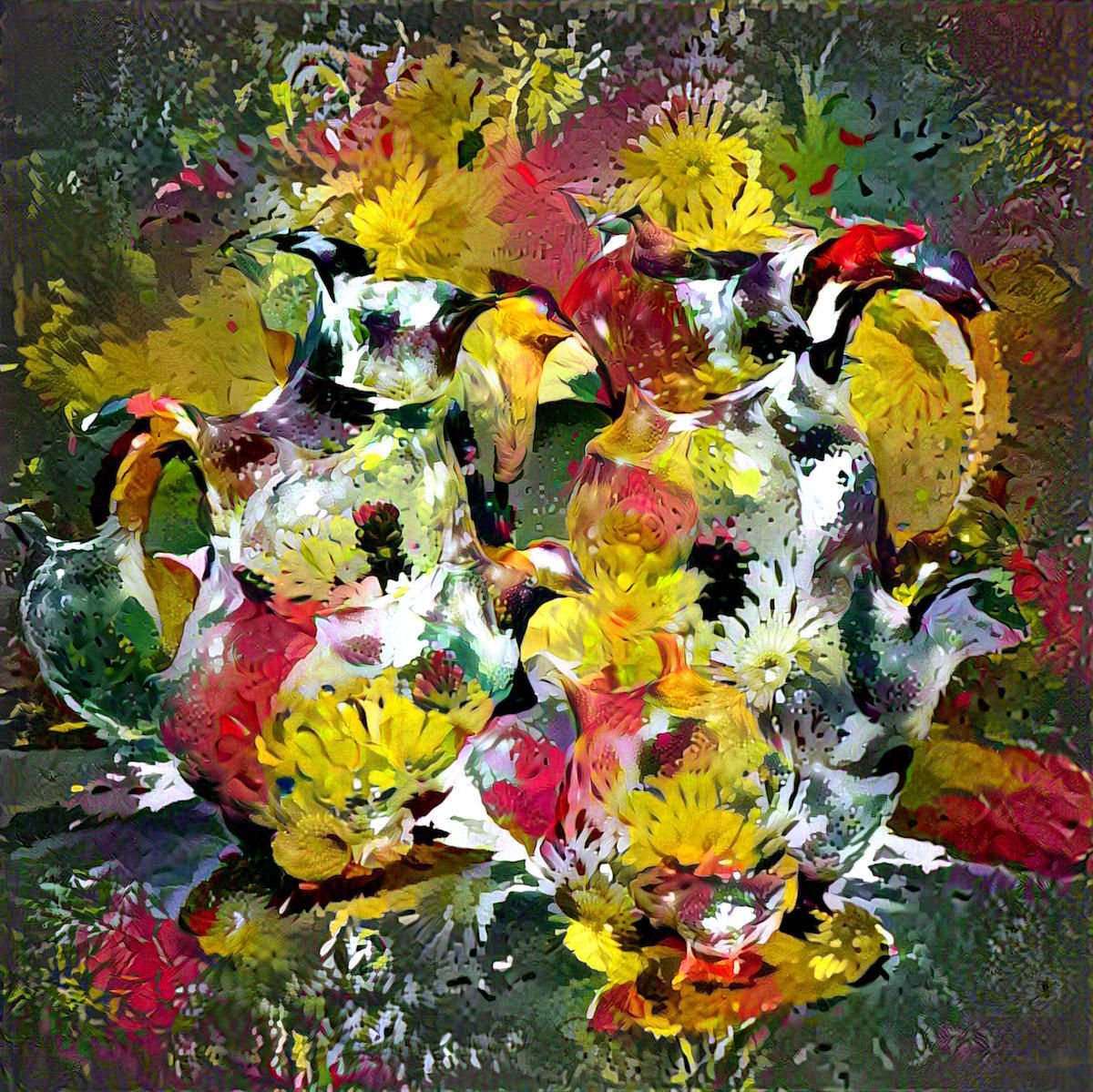

Bacchus

Neural net, Archival print, 11.75"x11.75", SOLD

2016

This piece is the result of two concepts that an artificial neural network has learned about, expressed simultaneously: Pitchers and Flowers. Together they form a blended new whole, though the combination has occurred not in the space of pixels but in the semantic or latent space that is created deep inside the neural network.

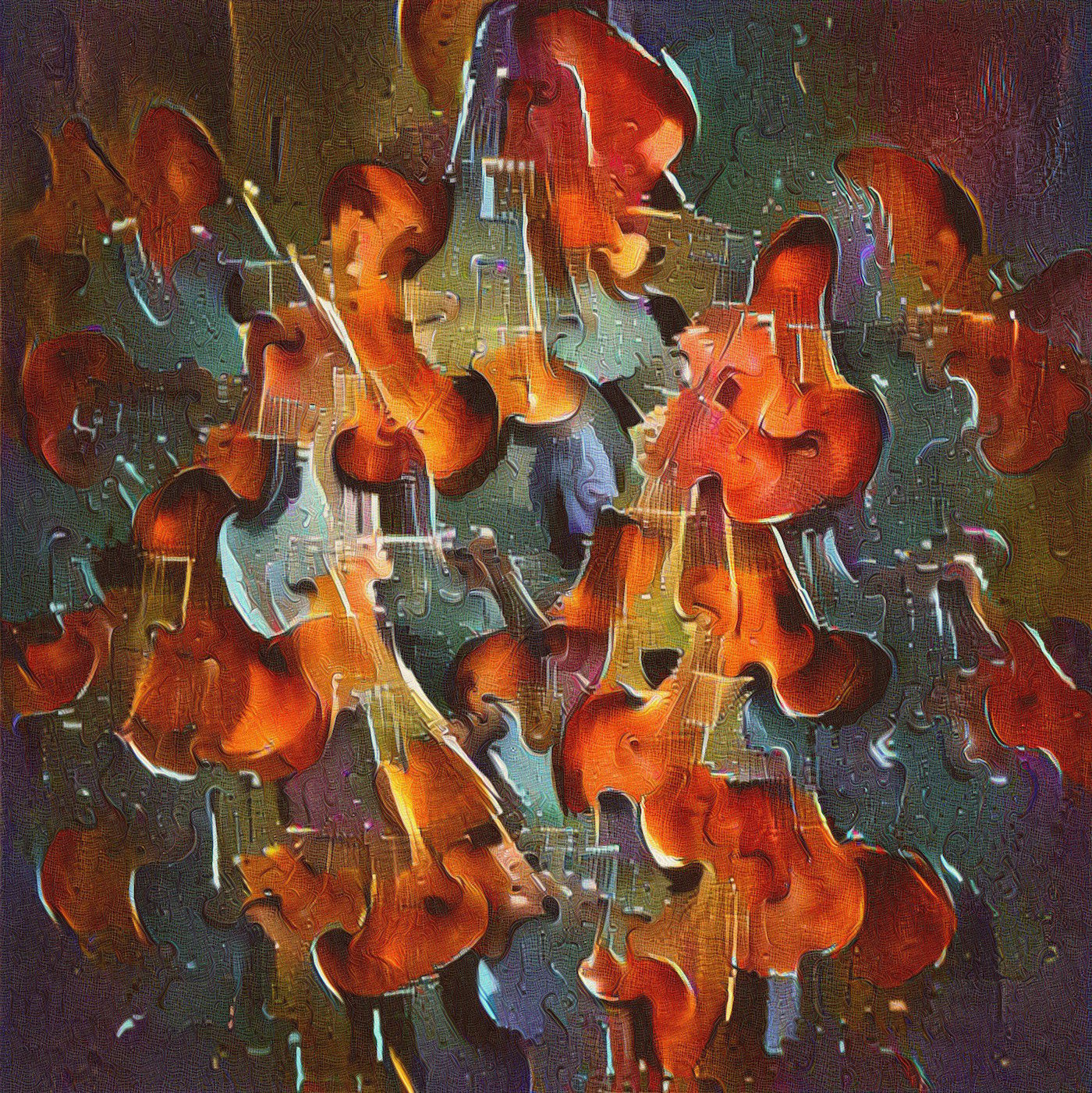

Style Is Violins

Neural net, Archival print, 21"x21", SOLD

2016

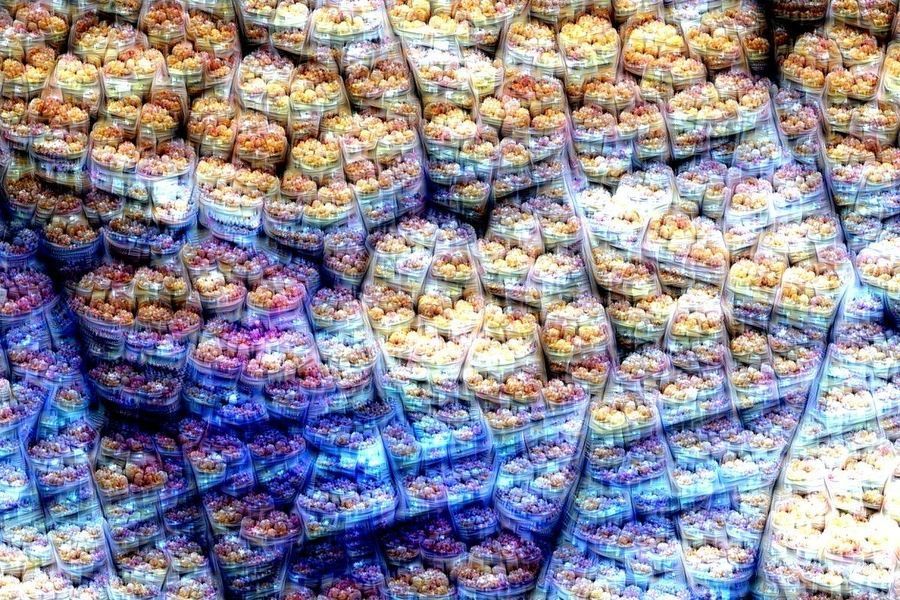

Cellism

Neural net, Archival print, 21"x21", SOLD

2016

Saxophone Dreams

Neural net, Archival print, 21"x21", SOLD

2016

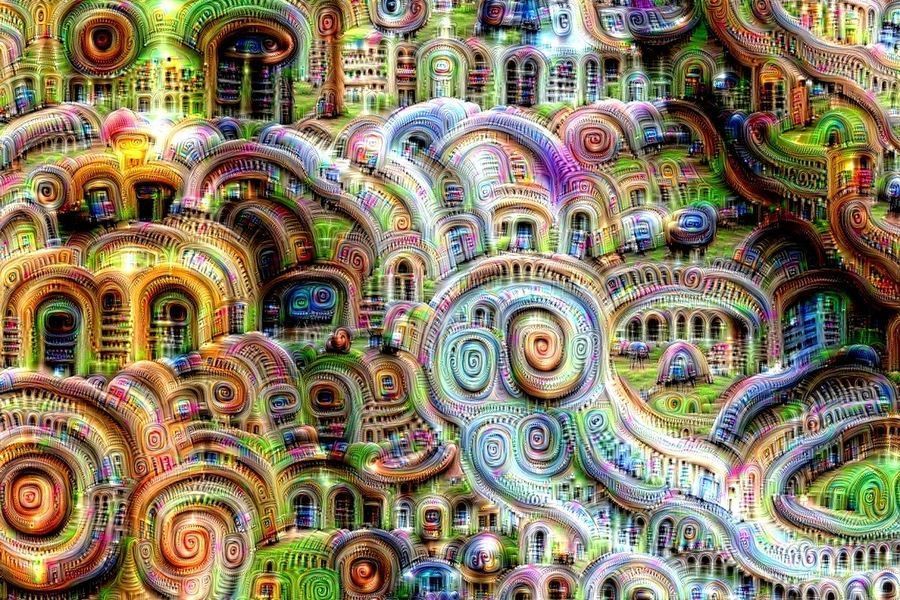

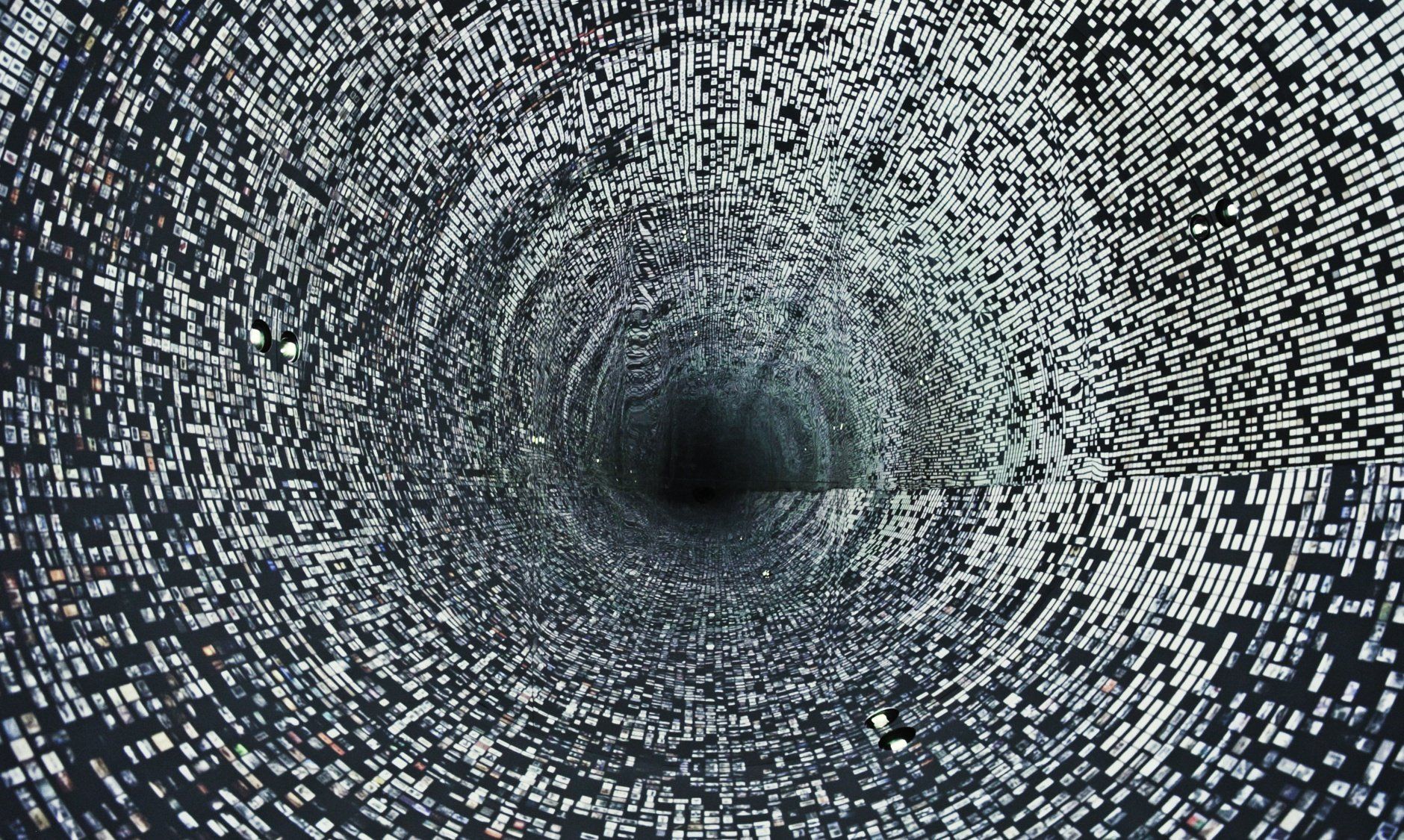

Inceptionism: Cities

Neural net, digital (NOT AVAILABLE)

2015

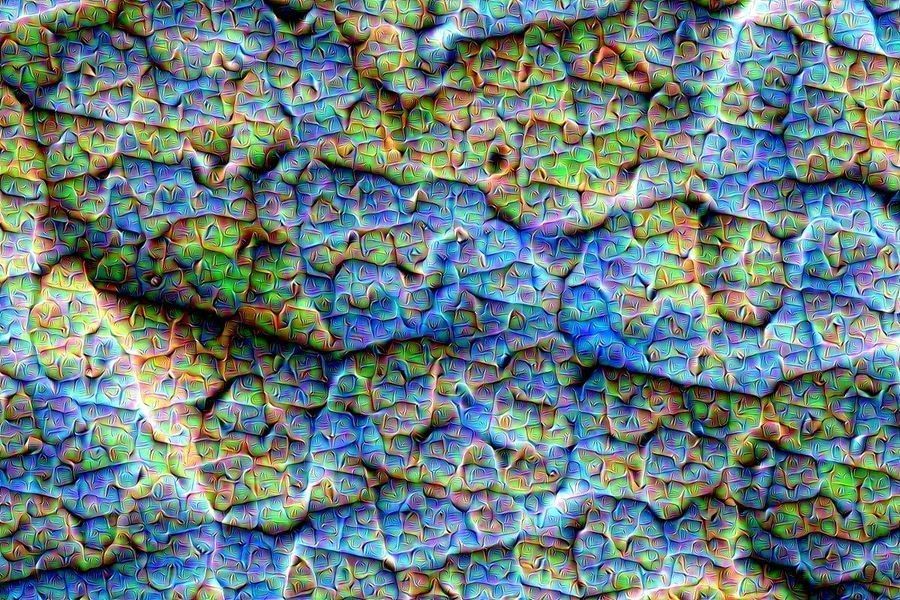

Inceptionism: Patterns

Neural net, digital, chance (NOT AVAILABLE)

2015

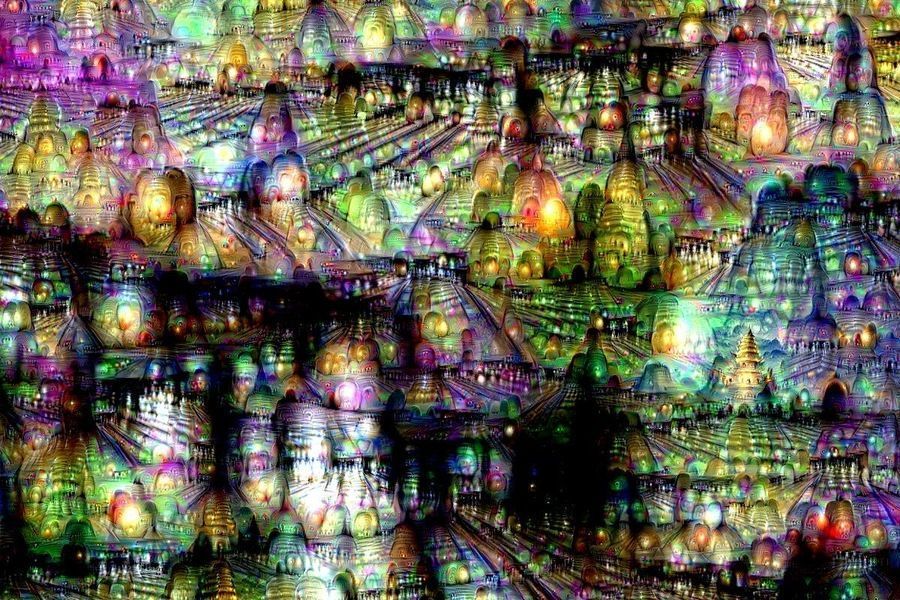

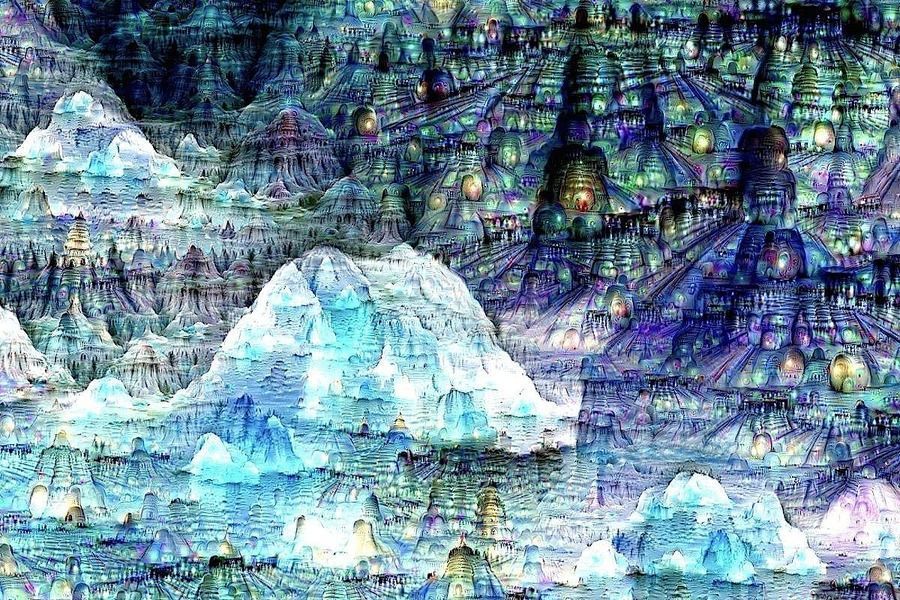

Inceptionism: Landscapes

Neural net, digital, chance (NOT AVAILABLE)

2015

EONS

Neural Net Animation

MP4, 3840x2160, 2019

EONS is a short animation, a moving painting, a music video, a GANorama: an experiment in creating a narrative arc using neural networks. EONS was created entirely using artificial neural nets: the Generative Adversarial Net BigGAN (Andrew Brock et al.) was used to create the visuals, while the music was composed by Music Transformer (Anna Huang et al.).

The work aims to remind us of our short and myopic existence on this planet, our relationship with nature at geological timescales, which, despite all our accumulated scientific knowledge, remain emotionally incomprehensible to us.

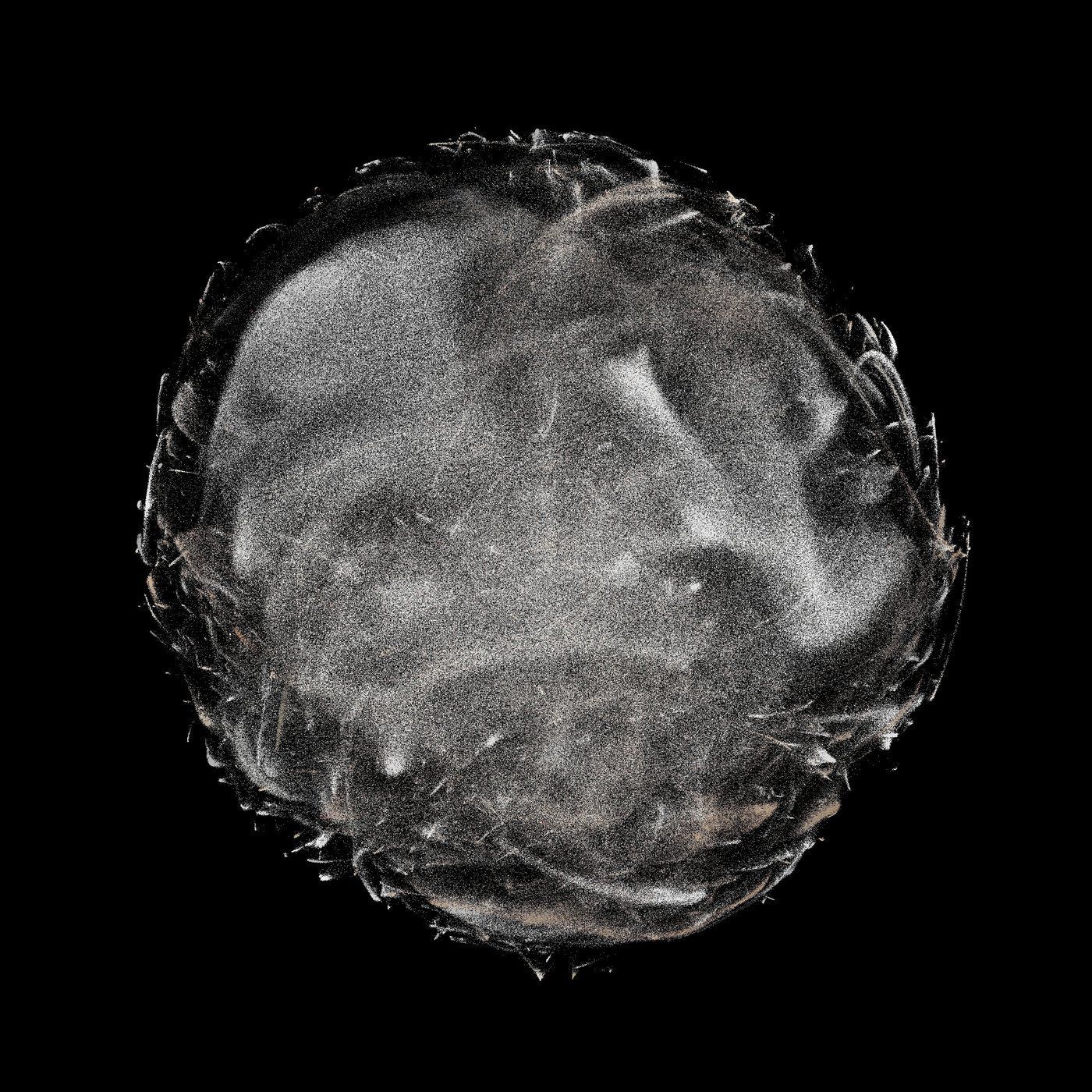

EONSphere #1 - "Mountains rise"

MP4, 3840x2160, Ed 13/13, 2021

The EONSpheres are reprojection fragments from EONS (https://miketyka.com/?p=eons), a 2019 short animation film made by @mtyka, created entirely using neural networks. Our presence on this planet has been but a tiny moment in the eons of geological time. And yet, despite all our accumulated scientific knowledge, we are incapable of truly understanding the vastness of this history and our insignificance in it. #TheFEN

EONSphere #2 - "Our Brief Moment"

MP4, 3840x2160, Ed 1/1, 2021

EONSphere #3 - "The decay"

MP4, 3840x2160, Ed 7/7, 2021

EONSphere #4 - "Return to the sea"

MP4, 3840x2160, Ed 41/41, 2021

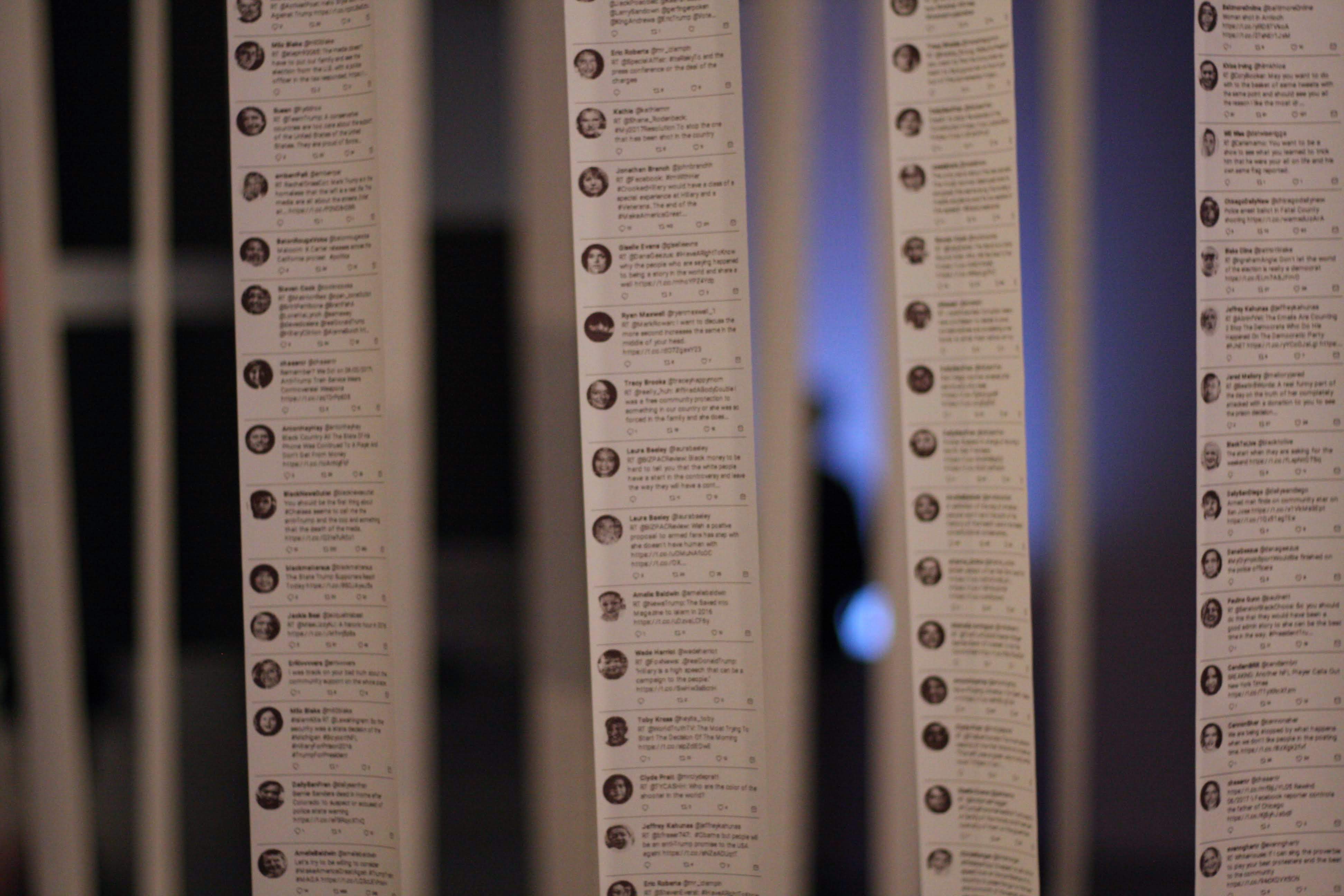

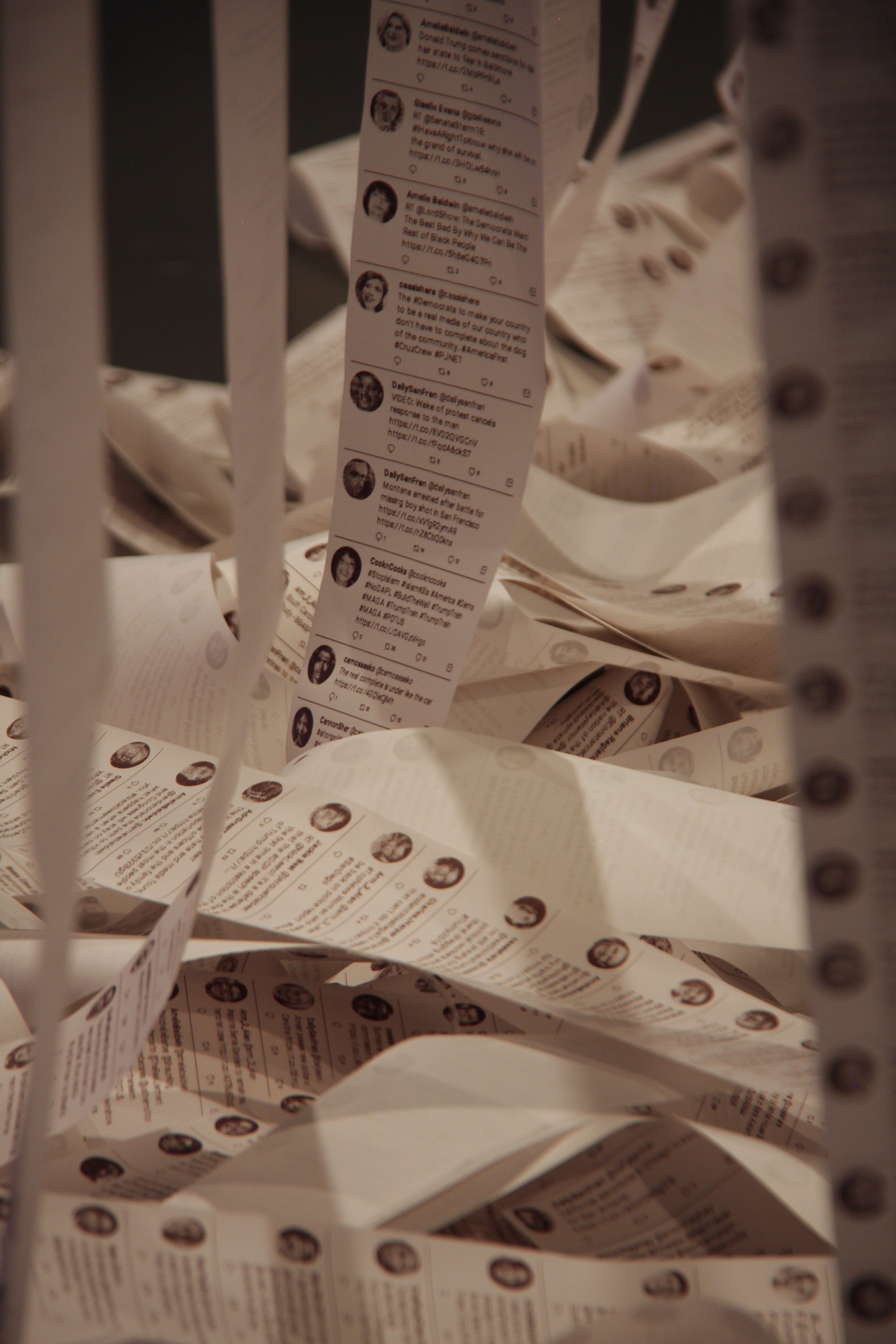

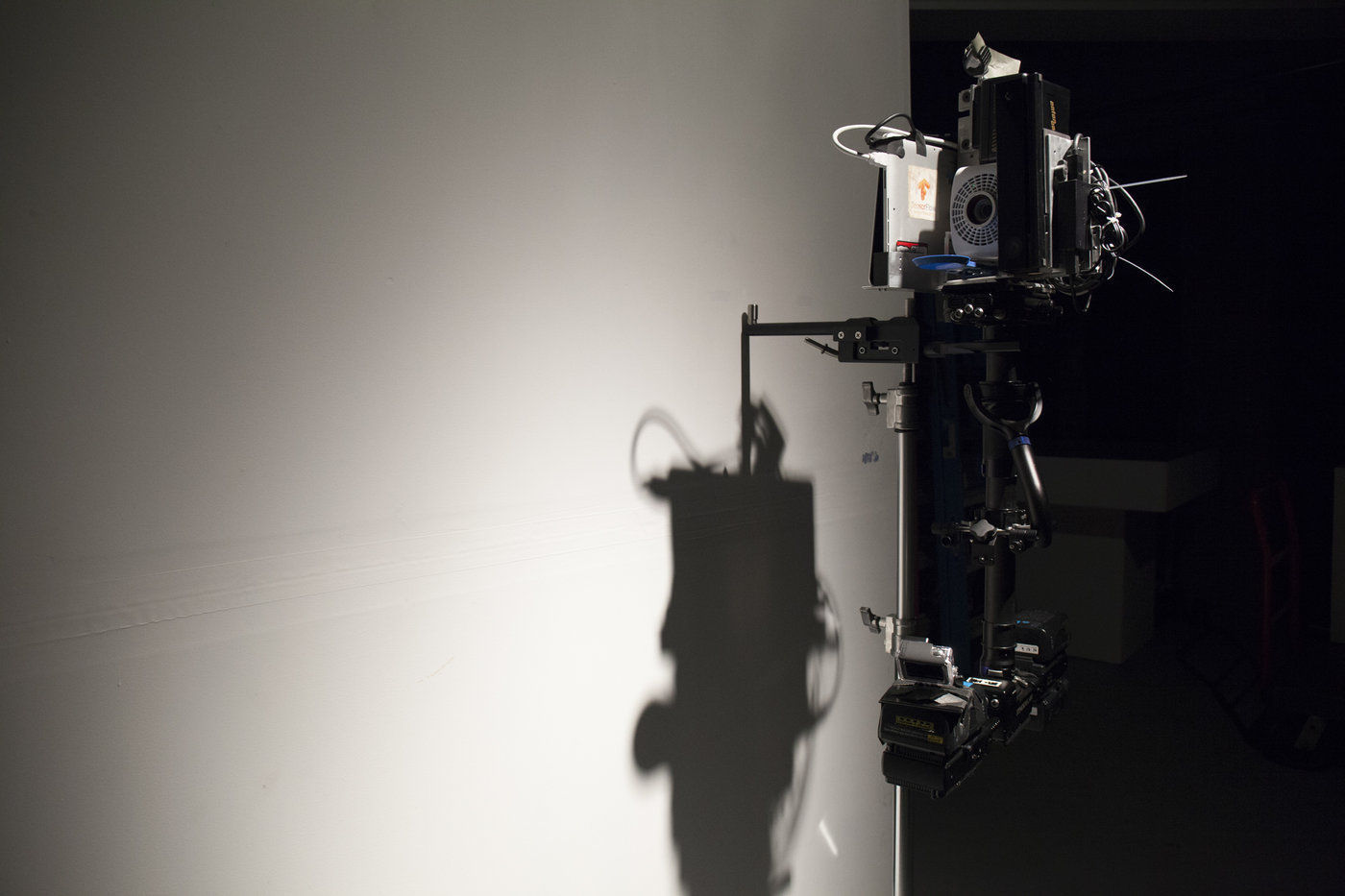

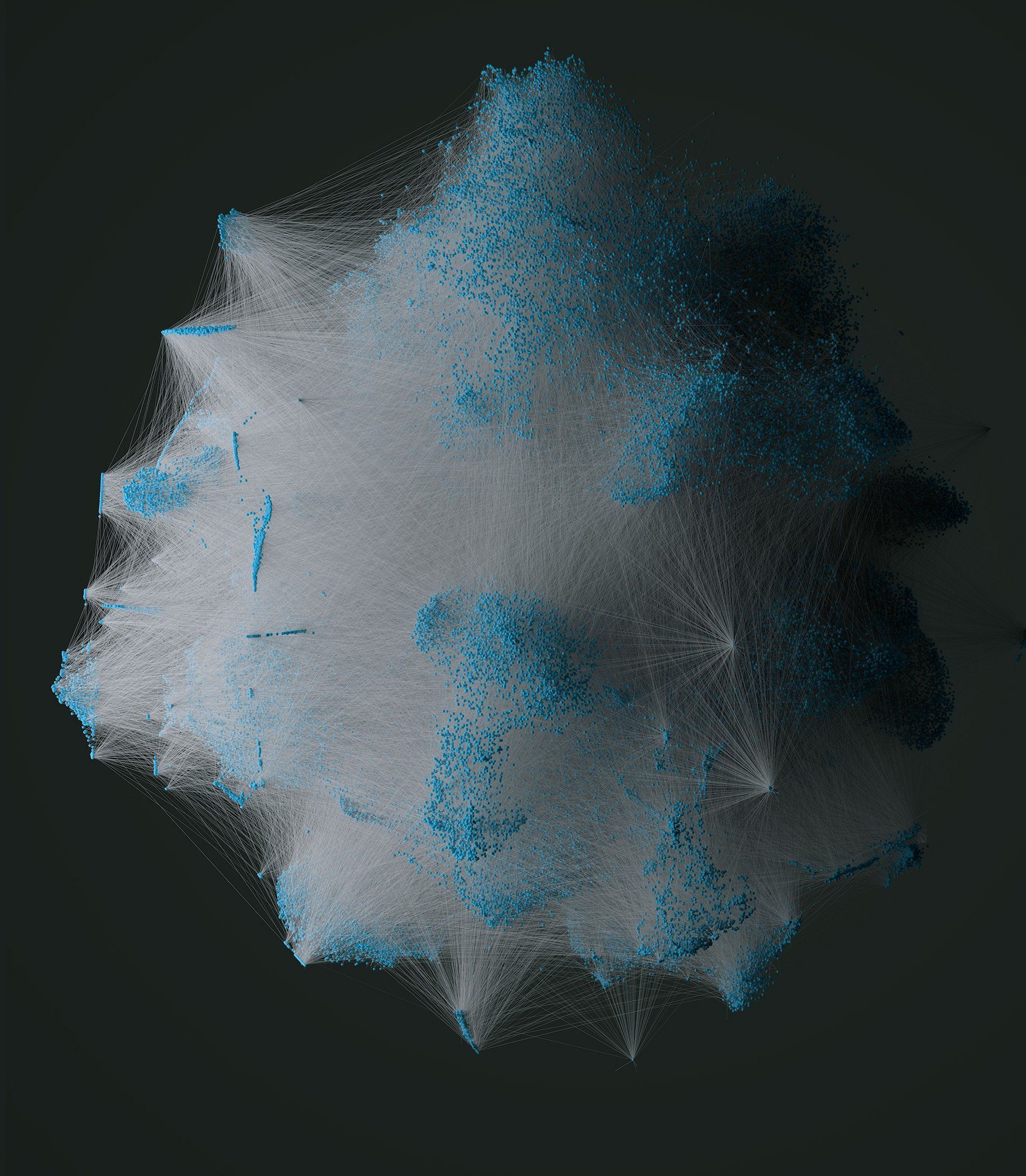

Us and Them

Kinetic Installation

2018, Commissioned by Seoul Museum of Art

2019, Mori Art Museum, Tokyo

"Us and Them" is a multi-modal installation that combines the earlier "Portraits of Imaginary People" work with neural-net text generation and kinetic sculpture. Trained on a recently released set of two hundred thousand tweets from accounts identified as bots after the 2016 US presidential election and consequently evicted from Twitter, this piece features 20 machine-learning-driven printers which endlessly spew AI-generated political tweets by imaginary, generated people. The descending curtain of printer paper creates a central space with two chairs, inviting two people to sit, converse and connect, despite the torrent of machine-generated political propaganda that surrounds them.

The piece examines the new world we have created, a digital attention economy in which we're constantly distracted, digitally connected and yet yearning for human connection. A fertile ground for political manipulation, propaganda is now fully automated and targeted, using machine learning to analyse its targets while pretending to be human. Synthetic media, also created through modern computer vision technology and neural networks, blurs the boundary between what is real and what is not. Doublespeak, such as the term "fake news", is further used to undermine what we know is real while pushing intentionally misleading information on an unprecedented scale.

"Us and Them" invites the viewer to reexamine their relationship with the machine we live inside and to seek true connection with one another.

The 20 thermal receipt printers are live and continuously printing AI-generated tweets at a slow but steady pace, letting this sculpture evolve and change every day. Ultimately the torrent will overwhelm and bury the central space and chairs.

The Consequences of Algorithmically Falsifiable Voice and Video for a Democratic Society

In 2018, the Justice Department charged thirteen individuals with interfering in American electoral and political processes. They had worked for the "Internet Research Agency," a well-funded Russian troll "factory" based in Saint Petersburg, which employed over four hundred people. Between 2015 and 2017, the agency is said to have created millions of tweets purporting to come from real people in America; these spread and amplified both left- and right-wing conspiracy theories with the aim of sowing division. Other social media accounts created with the same aim focused on fear mongering; some even masqueraded as local news sources.

In 2018, NBC and FiveThirtyEight published large datasets of tweets from such accounts, many of which had been evicted from Twitter following the 2016 US presidential election. This archive gave an interesting insight into the tactics and content of an operation coordinated by a diffuse network of manipulators whose goal was to influence the narrative, the attention, and most likely the outcome of a national election.

Similar emerging tactics with various aims have popped up all over the world. In December 2018, the New York Times reported that for a period of time, half of YouTube traffic was "bots masquerading as people." Similarly, Facebook officially reports that up to 40 percent of its video engagement is bot-driven, though a recent suit filed by small advertisers asserts the figure is closer to 80 percent. The practice has become so common that bootstrapping marketing campaigns often involves manipulating engagement statistics. There are now companies that sell engagement such as likes, comments, and shares, which are fulfilled by "click farms" or bots. Today, one can buy five thousand YouTube views for as little as fifteen dollars.

Media platforms have of course pushed back against fake engagement, building algorithms to detect non-human traffic. They use machine learning to detect subtle patterns in behavior, such as mouse movements and time between clicks. If the patterns don't match those of typical humans, the clicks are rejected in the aggregate data. Naturally, fraudsters fight back, often using the same techniques that are used to detect them in order to simulate natural behavior and trick the filters. The two sides are now locked in an arms race. Just a few years ago, captchas, quick challenges only humans could solve, were used to help distinguish real humans from fake ones. Unfortunately, recent improvements in machine learning make it increasingly hard to erect such barriers, because the gap in ability between man and machine is closing rapidly.

Another recent phenomenon enabled by machine learning is porn with artificial faces, which often targets celebrities, created through the so-called deep-fake technique. The algorithm learns to map all expressions and view-angles of a given face and "transplants" them onto another, allowing for a virtual face graft; the technology is very similar to the one I used to create the artwork in this project. This technique has rapidly proliferated on Reddit and porn sites, so much so that in September 2018 Google added what it terms involuntary synthetic pornographic imagery to its ban list.

The use of this technique is not limited to celebrity pornography or revenge porn. In early 2018, for example, a video circulated on social media sites purporting to show Emma González, a gun-control activist, ripping up the US Constitution. The video, fabricated using the deep-fake technique to create an impression of her being a traitor to American ideals, did not aim to convince anyone across the political aisle so much as to rile up those who already believe their Second Amendment rights are under attack. This shows that the use of this technique for political manipulation helps to polarize the population. What is more, our general distrust in the media is eroding our trust in any objective truth; we become accustomed to questioning everything, and if something, be it true or false, doesn't agree with our existing views and opinions, we discard it as fictitious. This effect is called the liar's dividend, in which a skilled manipulator can dismiss truth as false. For example, at a rally in July 2018, the president of the United States accused the mainstream media of propagating fake news, and exclaimed, "Just remember, what you're seeing and what you're reading is not what's happening."

The trend of faking individuals on a large scale in the media is having a profound influence on our collective beliefs, whether we lean towards the left or the right of the political spectrum. While the particular operation aimed at the 2016 US presidential election is often perceived to have tried to energize the Right in particular, a detailed analysis of the tweets published by NBC and FiveThirtyEight reveals that a significant fraction of the trolls aimed to provoke the Left into a frenzy by spreading fear and uncertainty. Overall, though, the goal of this coordinated activity was to create a more polarized society, or rather the Us vs Them mentality we are now all familiar with in public discourse. Note that the manipulation of the 2016 US electoral process was largely driven manually. By 2020, however, the technology for synthetic writing will have likely reached a level of believability such that similar tactics will be automatable, and will enable a very small number of people to orchestrate a very large and diffuse electoral process. In fact, in early 2019, OpenAI published a new algorithm for the generation of text, which the authors believed to be so realistic that OpenAI decided not to openly publish the full model, for fear of misuse in the ways described earlier.

What struck me when observing all these present-day trends and novel techniques was the deception of identity at their very core. Historically, humans have always been able to easily verify someone's existence by interacting with them face to face. Nowadays, because of the disjointed way that we experience others' accounts on social media, especially public social media like Twitter, these fake accounts don't have to be particularly sophisticated to appear real. A simple avatar and an apparent location in the United States suffice. Unlike in the top-down approach of classic propaganda, here people are conned into believing that these fake identities are in fact true individuals who are "like them" and who espouse certain views.

Prior to global social media, it was impossible for a foreign state to effectively access Americans in the United States; top-down information distribution channels such as newspapers and television are usually well under the influence of the government. Moreover, it would have been cost-prohibitive for a foreign nation to send tens of thousands of spies to pretend to be normal people, to make friends with the locals, and then to start subtly changing the conversation. Today, however, social media make it an easy proposition by opening up a new way for bottom-up manipulation, which can be orchestrated from abroad, and which has already proven itself to be remarkably effective at sowing division and distrust of one's own country. The term fake news deliberately obscures the fact that the disinformation is spread with very specific intents. What we have here is not simply fake news, but rather a new form of coordinated propaganda.

The idea of imaginary people, central to the bottom-up approach, inspired me to explore this space artistically. Any collective belief, be it religious, economic, or political, can effectively become "real" if that fictitious entity affects the actions of the collective. In that sense, these imaginary people who express their views on social media really exist in the same way that deities do, as long as a group believes in their existence and reality. I was therefore curious to speculate who these fictitious humans might be or what they might look like, which became the inspiration for algorithmically generating faces to create portraits of imaginary people.

In recent years, computer graphics algorithms, especially generative neural networks, have become rather proficient at producing and manipulating media such as image, video, and sound, to a degree that the results have become hard to distinguish from their real counterparts. WaveNet, an algorithm that models raw audio waveforms, is capable of producing extremely realistic human speech. The DeepDream algorithm, released in 2015, showed that a computer system that is good at perceiving image data can also be inverted to generate images. The Generative Adversarial Network (GAN), a machine-learning algorithm first published in 2014, has been refined to specifically generate new images based on a set of training images.

While tweets from 2016 predate these techniques, the appearance of such technologies gives us a taste of what's to come. As discussed earlier, fake identities and their subsequent opinions, also fabricated, were created and spread in 2016 by a small group of dedicated human beings; nowadays, however, we can expect this sort of work to become rapidly automated. Likewise, those who try to separate wheat from chaff are also increasingly turning to sophisticated machine-learning algorithms to find and filter inaccurate or fake content. We thus now have an arms race which is already affecting the nature of the internet, how we build our beliefs of the world around us, how we delegate trust in society, and what we base our own actions on.

Feb 2019, Mike Tyka

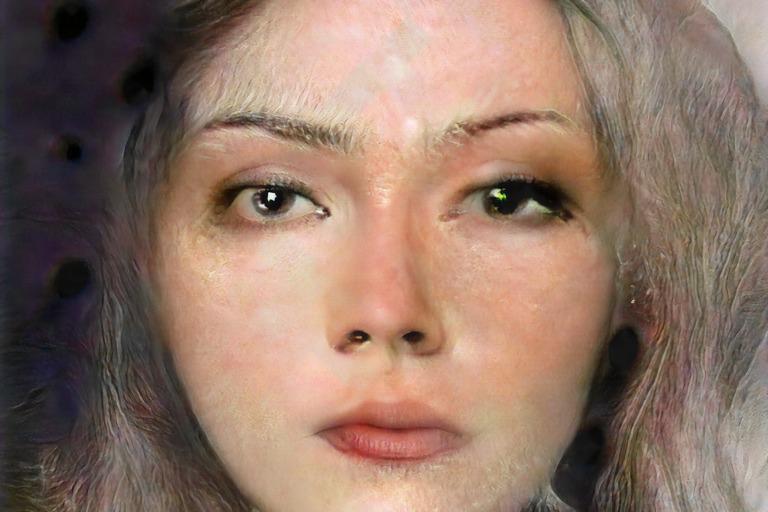

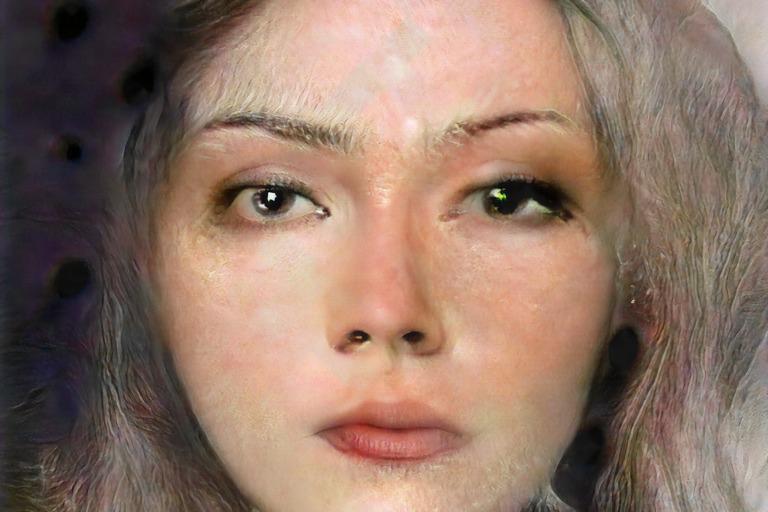

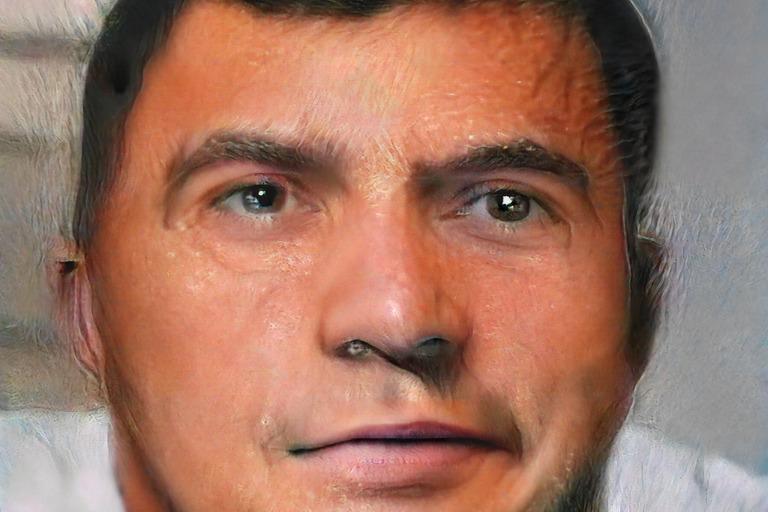

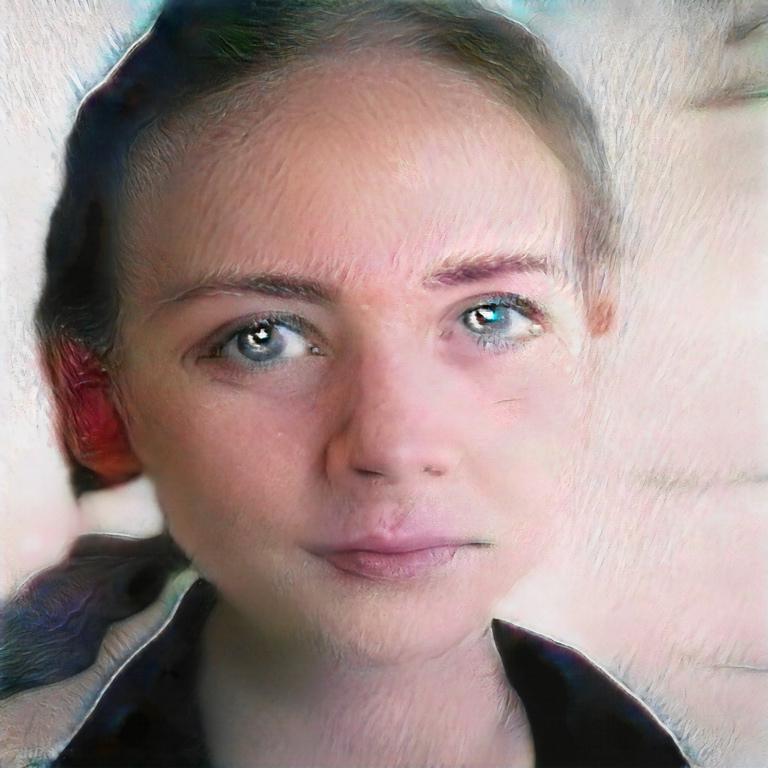

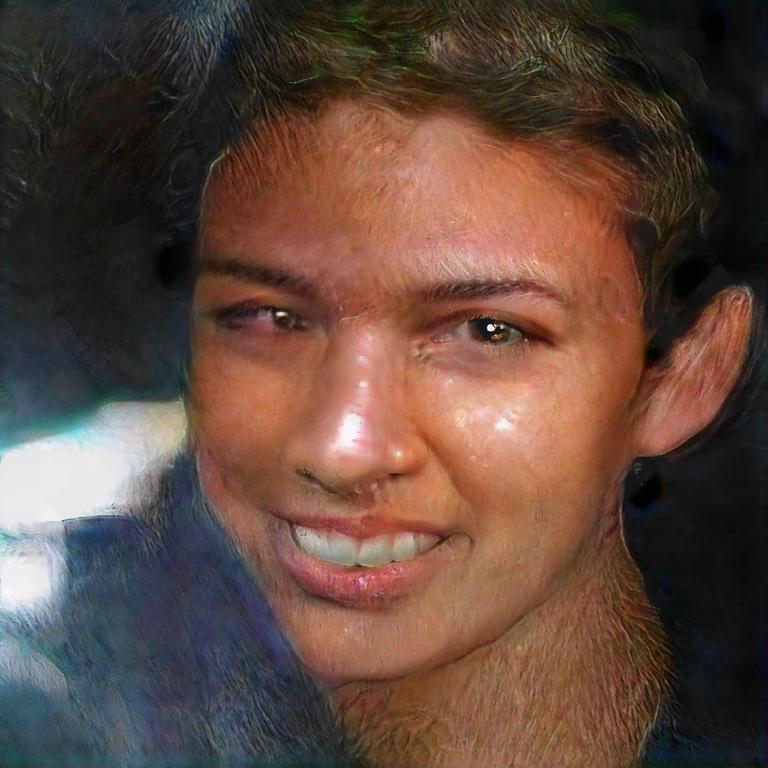

I see you

Archival print, 20"x20", Edition of 2

2017

The series, titled "Portraits of Imaginary People", explores the latent space of human faces by training a neural network to imagine and then depict portraits of people who don’t exist.

To do so, many thousands of photographs of faces taken from Flickr are fed to a type of machine-learning program called a Generative Adversarial Network (GAN). GANs work by using two neural networks that play an adversarial game: one (the "Generator") tries to generate increasingly convincing output, while a second (the "Discriminator") tries to learn to distinguish real photos from the artificially generated ones.

At first, both networks are poor at their respective tasks. But as the Discriminator network starts to learn to tell fake from real, it keeps the Generator on its toes, pushing it to generate more and more convincing examples. In order to keep up, the Generator gets better and better, and the Discriminator correspondingly has to improve its response. With time, the images generated become increasingly realistic, as both adversaries try to outwit each other. The images you see here are thus a result of the rules and internal correlations the neural networks learned from the training images.
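One round of the adversarial game described above can be sketched with a deliberately tiny, one-dimensional stand-in. This is not the network used for the portraits: the "Discriminator" here is a single logistic unit, the "Generator" only learns an offset `b`, and the binary cross-entropy losses, learning rate and sample values are all assumptions made for illustration.

```python
import math


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def discriminator_step(w, c, x_real, x_fake, lr):
    """One gradient step on -log D(x_real) - log(1 - D(x_fake))."""
    d_real = sigmoid(w * x_real + c)  # probability assigned to "real"
    d_fake = sigmoid(w * x_fake + c)
    grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
    grad_c = (d_real - 1.0) + d_fake
    return w - lr * grad_w, c - lr * grad_c


def generator_step(b, w, c, z, lr):
    """One gradient step on -log D(G(z)), where G(z) = z + b."""
    d_fake = sigmoid(w * (z + b) + c)
    grad_b = (d_fake - 1.0) * w
    return b - lr * grad_b


# Toy setup: "real" data sits at 4.0, the Generator starts out producing 0.0.
w, c, b = 0.0, 0.0, 0.0
x_real, z, lr = 4.0, 0.0, 0.1

# The Discriminator learns first: larger values now look more "real" (w > 0).
w, c = discriminator_step(w, c, x_real, z + b, lr)

# The Generator responds by shifting its output toward the real data.
b_after = generator_step(b, w, c, z, lr)

print(f"w = {w:.3f}, c = {c:.3f}, b: {b:.3f} -> {b_after:.3f}")
```

In a real GAN both players are deep networks trained on batches of images, and the two steps alternate many thousands of times; the sketch only shows the direction in which each player pushes the other.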

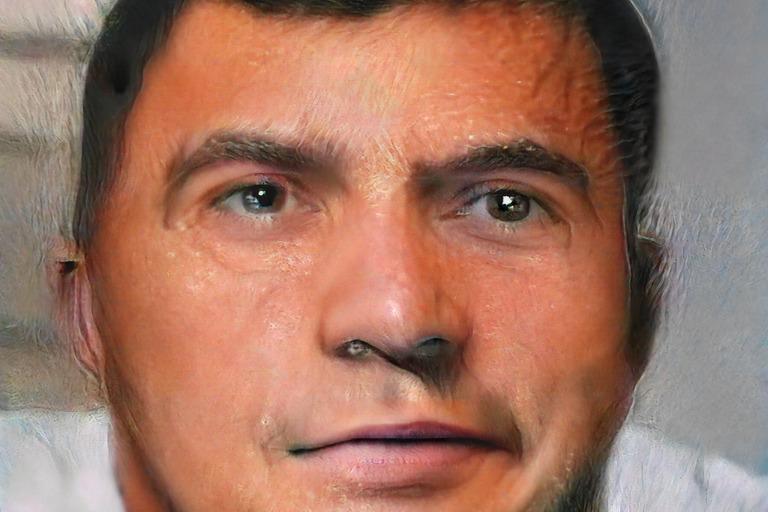

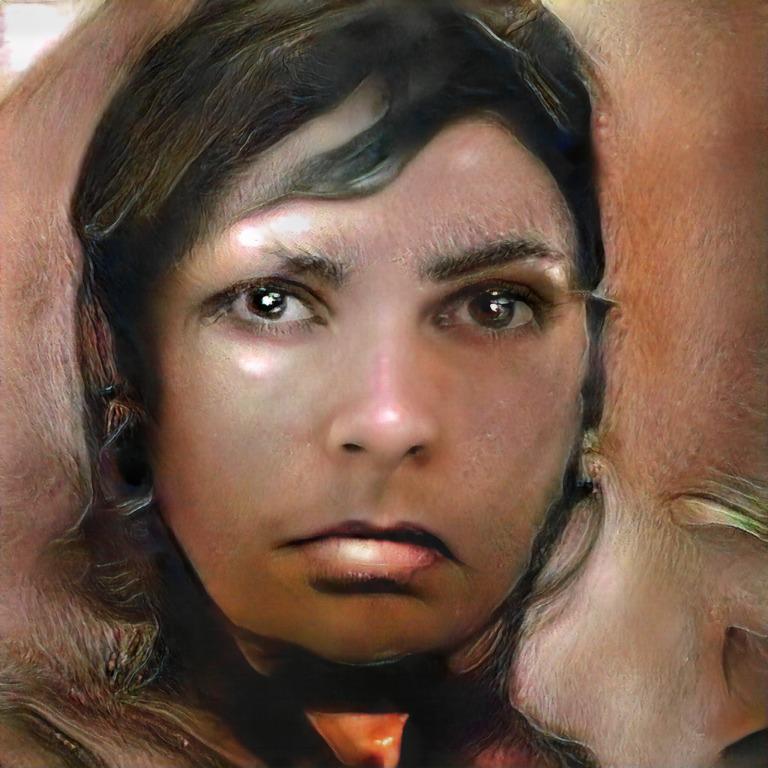

A fleeting memory

Archival print, 20"x20", Edition of 2

2017

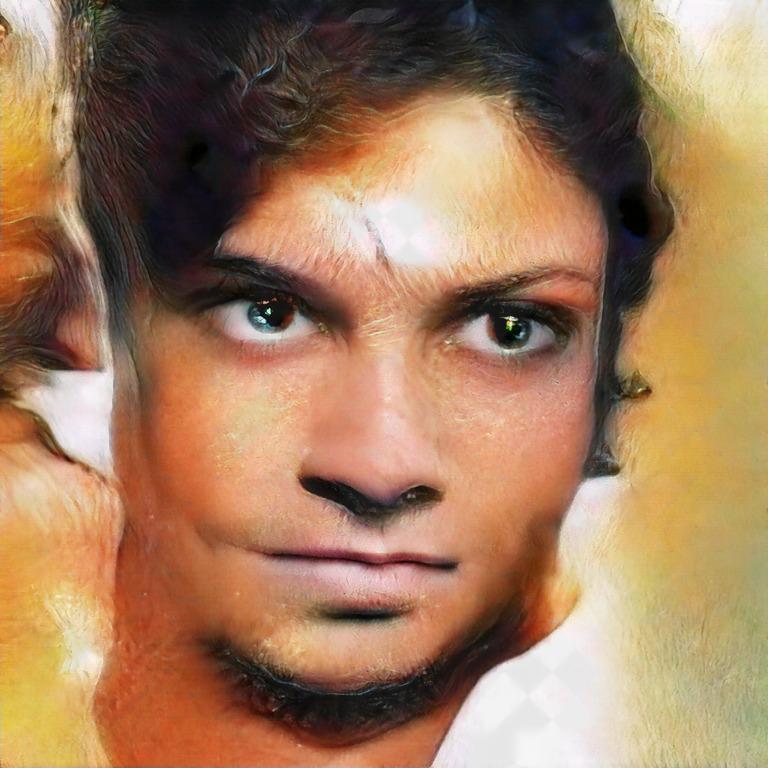

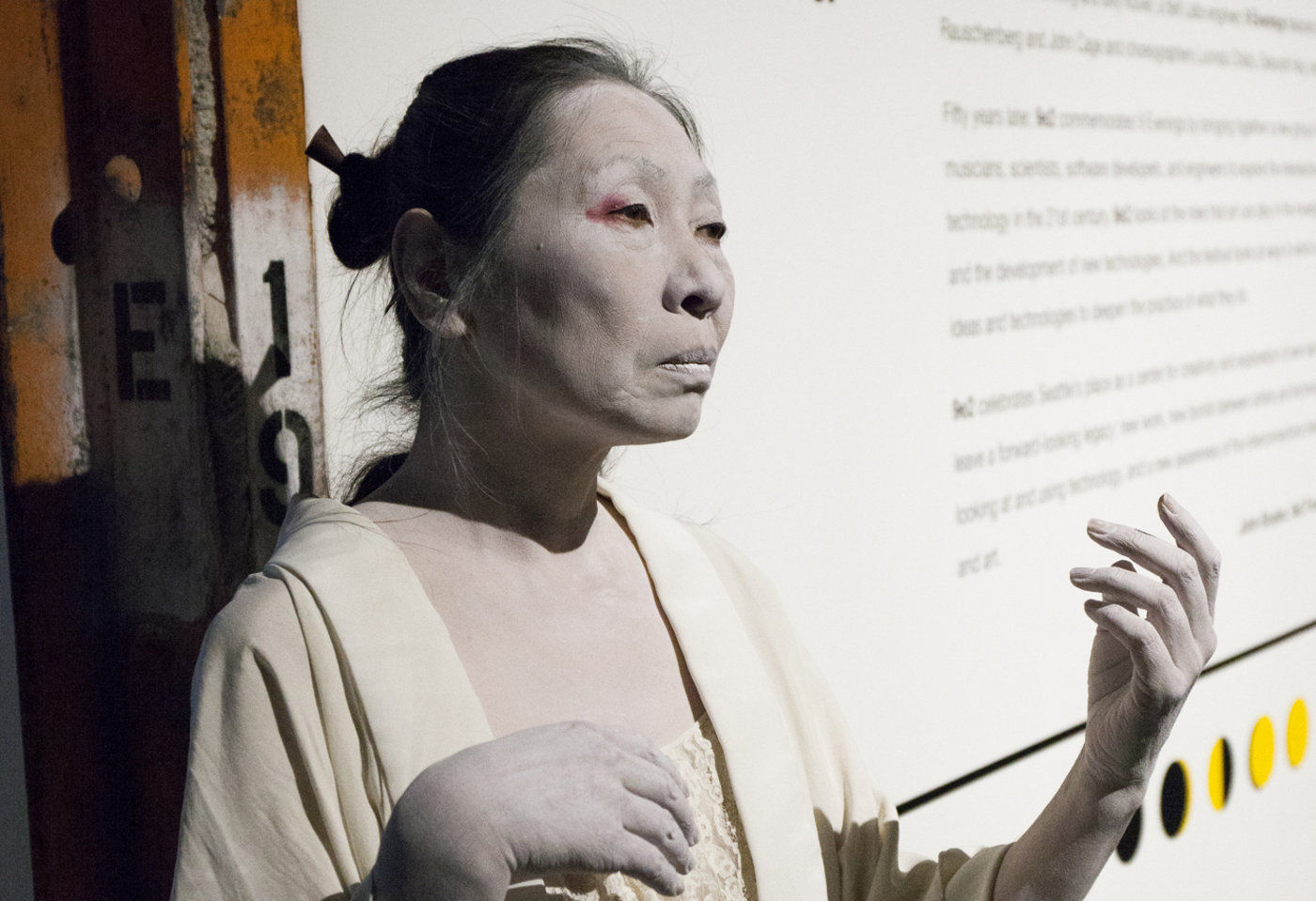

Blinovaexininta

NFT only, Edition of 25

2017

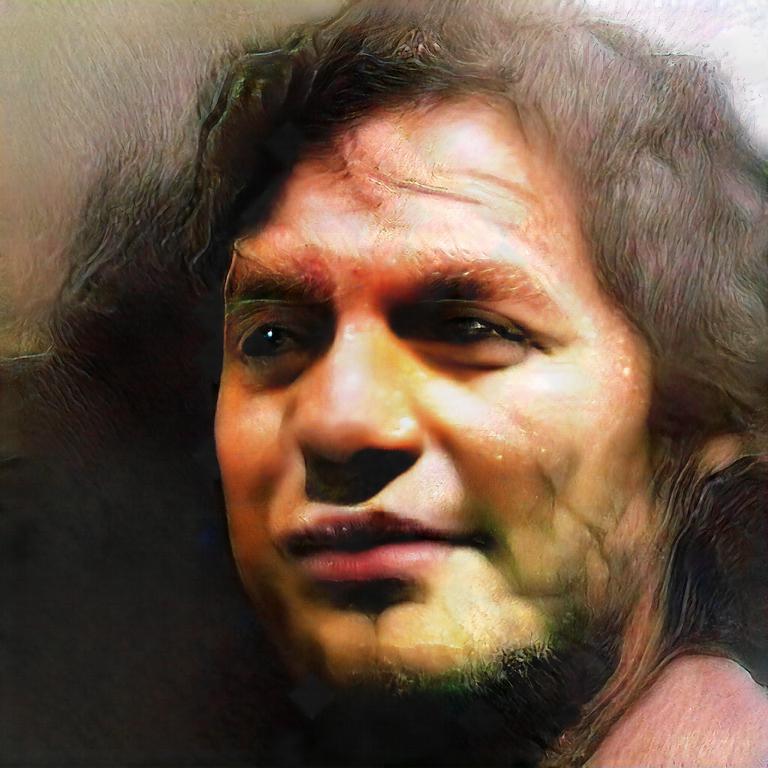

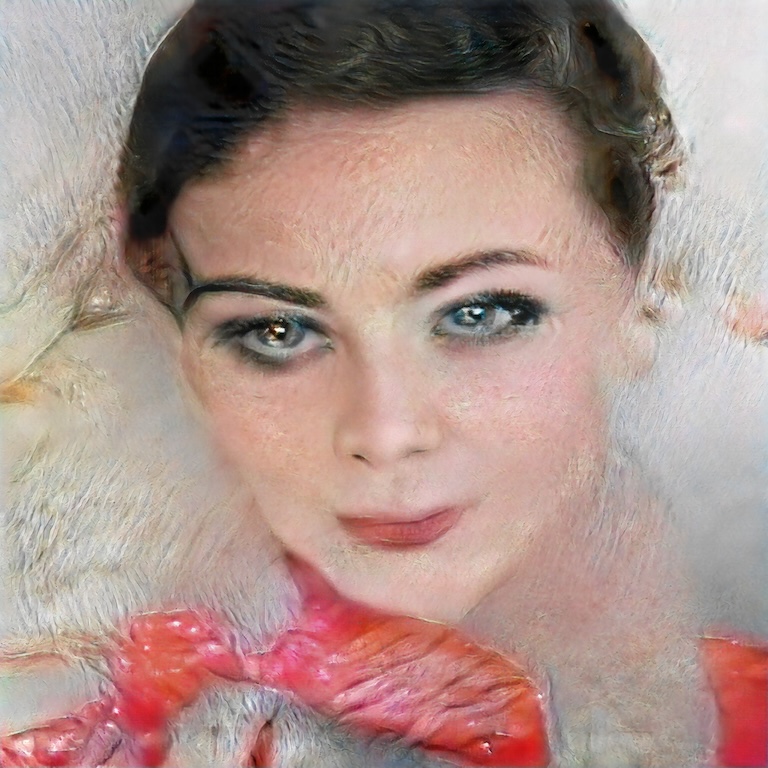

Elizabeth_Pr22

NFT only, Edition of 1

2017

evegreene88

NFT only, Edition of 5

2017

finley1589

NFT only, Edition of 1

2017

Gabrielitaa7x

NFT only, Edition of 3

2017

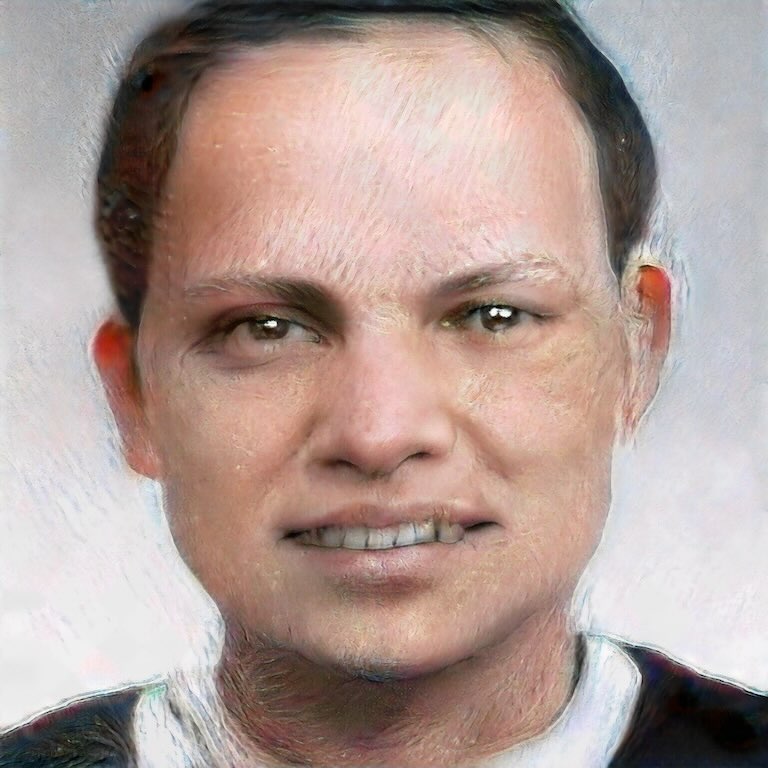

hamidmansoor123

2017

HawaiianAnn

NFT only, Edition of 1

2017

Jaclyn_Donahue_

NFT only, Edition of 1

2017

JoshuaSpence88

2017

khaledbakri7

NFT only, Edition of 1

2017

komarova6969

2017

maksimkovalev15

NFT only, Edition of 1

2017

mihailkuznetzo1

NFT only, Edition of 1

2017

VittoreGuidi

NFT only, Edition of 1

2017

Pamela_Sharky13

NFT only, Edition of 1

2017

EveWebster373

NFT only, Edition of 1

2017

Sarah_Giaco

NFT only, Edition of 1

2017

TinaTkurtz1222

2017

whoareyou765

2017

DaDanielDaDa

2017

DmitriBelov12

2017

Kaliyah_Rrs

NFT only, Edition of 1

2017

MelissaYesIam

2017

Uliabelushiva

2017

chalizzTrT

NFT only, Edition of 3

2017

Dopamine

Plaster of Paris, Mirror, Dollar bill

2017

Harvesting the Sap

Cast lead glass, machined bronze

2018

Groovik’s Cube

2009-2014

Burning Man & Liberty Science Center, NJ

Groovik’s Cube is a fully playable, 35ft-high sculpture inspired by the classic puzzle, Rubik’s Cube. It was built by Mike Tyka, Barry Brumitt and a team of artists and engineers from Seattle in 2009. It is, to our knowledge, the largest functional Rubik’s Cube structure in the world. Groovik’s Cube is controlled from 3 control stations that surround the main structure - each player is able to rotate only one axis, creating an entirely new, collaborative puzzle solving experience.

Groovik’s Cube offers a unique new playing mode where three players must collaborate to solve the classic Rubik’s cube puzzle. The cube is controlled via three touch screen interfaces located around the cube, with each interface capable of rotating only one axis of the cube - no single player can solve the cube alone. This innovative twist adds a completely new dimension to the game and turns the classic puzzle into a social game and a fascinating social spectacle.

Groovik’s Cube is built from a lightweight aluminum frame, covered in fabric, and illuminated from the inside by 2 kilowatts of high-power LEDs. It simulates the motion of an ordinary Rubik’s Cube by animating the rotations on the 54 "pixels" that comprise the cube. The total weight of the structure is around 2000 lbs and is designed such that participants may walk safely under the structure. The thin supports are virtually invisible from a distance, creating the magical illusion of a floating cube.
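The 54-"pixel" state and the one-axis-per-player rule can be illustrated with a small model. This is a sketch under assumptions, not the installation's actual control software: each pixel is stored as a position on a 3x3x3 grid plus the direction it faces, and the names `solved_cube`, `turn`, `is_solved` and the axis labels `"x"`, `"y"`, `"z"` are hypothetical.

```python
from itertools import product

# Each of the three control stations is tied to exactly one axis.
AXES = {"x": 0, "y": 1, "z": 2}


def solved_cube():
    """Return the 54 pixels as {(position, facing): color}."""
    pixels = {}
    for pos in product((-1, 0, 1), repeat=3):
        for axis in range(3):
            if pos[axis] != 0:
                facing = tuple(pos[axis] if i == axis else 0 for i in range(3))
                pixels[(pos, facing)] = facing  # a color is named by its home face
    return pixels


def rot90(v, axis):
    """Rotate an integer vector by a quarter turn about the given axis."""
    i, j = [k for k in range(3) if k != axis]
    out = list(v)
    out[i], out[j] = -v[j], v[i]
    return tuple(out)


def turn(pixels, player_axis, layer):
    """Quarter-turn one layer (-1, 0 or 1) about a player's single axis."""
    axis = AXES[player_axis]
    moved = {}
    for (pos, facing), color in pixels.items():
        if pos[axis] == layer:
            moved[(rot90(pos, axis), rot90(facing, axis))] = color
        else:
            moved[(pos, facing)] = color
    return moved


def is_solved(pixels):
    """True when every pixel shows the color of the face it sits on."""
    return all(color == facing for (_, facing), color in pixels.items())
```

In this model a player assigned `"x"` can only call `turn(cube, "x", layer)`, so any solution has to combine moves from all three stations. Four quarter turns of the same layer return the cube to its previous state.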

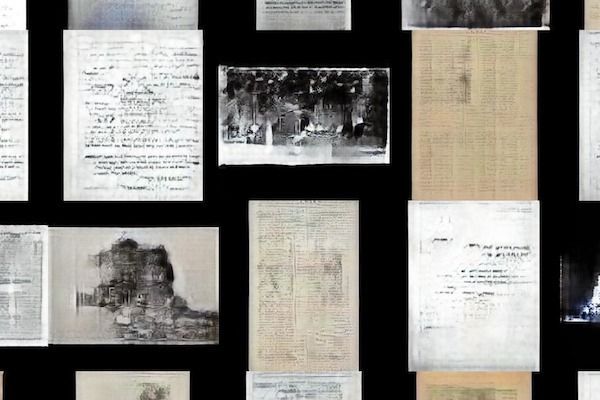

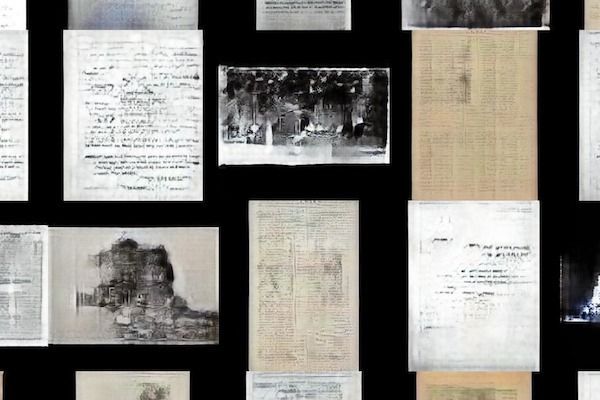

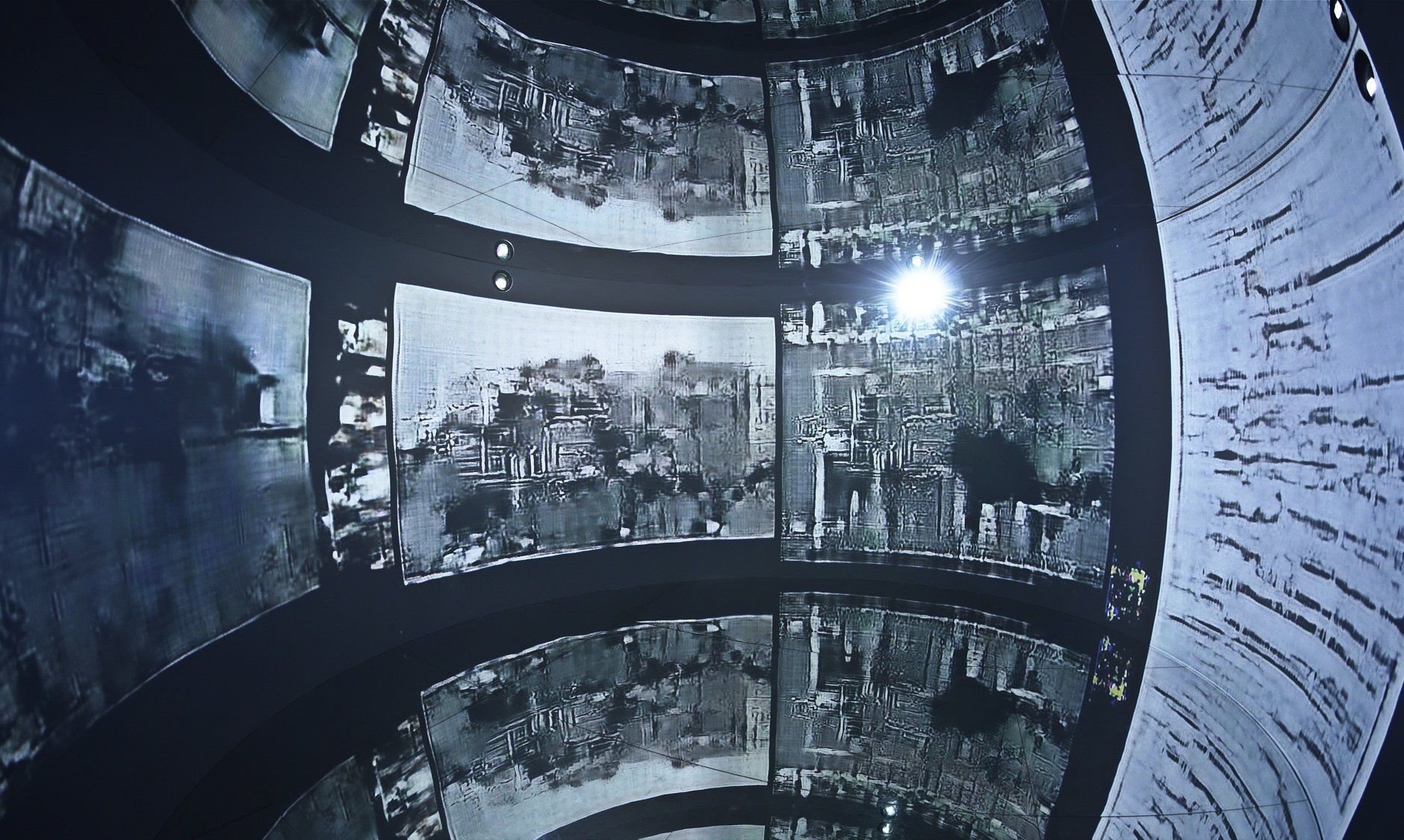

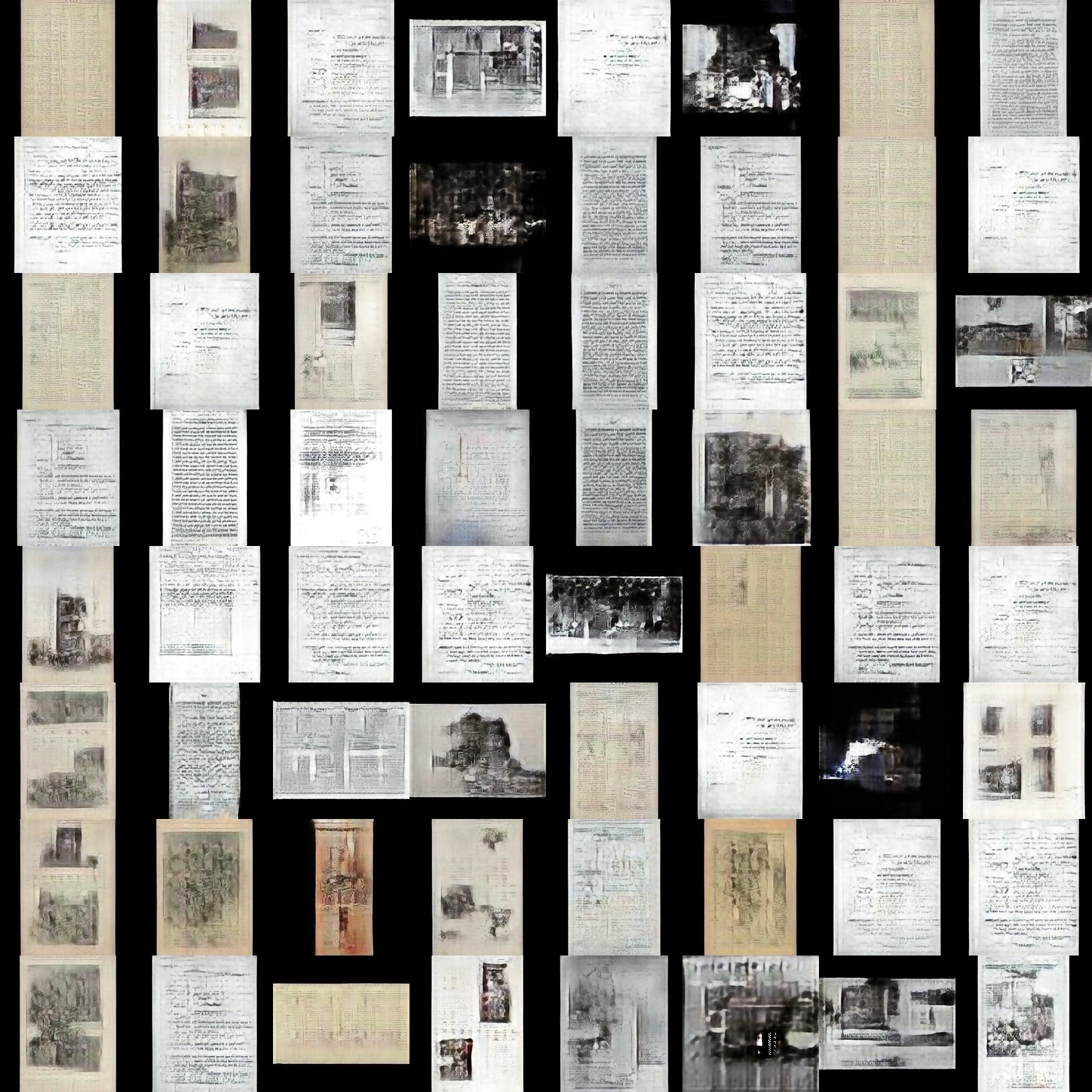

Archive Dreaming

2017

Archive Dreaming is a collaboration with Refik Anadol, who was commissioned to work with the SALT Research collections, an archive of 1,700,000 historical documents. Shortly after receiving the commission, Anadol was a resident artist for Google's Artists and Machine Intelligence Program, where he closely collaborated with Mike Tyka and explored cutting-edge developments in the field of machine intelligence in an environment that brings together artists and engineers. Developed during this residency, his intervention Archive Dreaming transforms the gallery space on floor -1 at SALT Galata into an all-encompassing environment that intertwines history with the contemporary, and challenges immutable concepts of the archive, while destabilizing archive-related questions with machine learning algorithms.

In this project, a temporary immersive architectural space is created as a canvas with light and data applied as materials. This radical effort to deconstruct the framework of an illusory space transgresses the normal boundaries of the viewing experience of a library and the conventional flat cinema projection screen, turning them into a three-dimensional kinetic and architectonic space of an archive visualized with machine learning algorithms. By training a neural network with images of the 1,700,000 documents at SALT Research, the project aims to create an immersive installation with architectural intelligence that reframes memory, history and culture in museum perception for the 21st century through the lens of machine intelligence.

Archive Dreaming is one of the first ever large-scale art installations to use "Generative Adversarial Networks" to "dream" imaginary representations based on a large corpus of images.

Mike Tyka studied Biochemistry and Biotechnology at the University of Bristol. He obtained his PhD in Biophysics in 2007 and went on to work as a research fellow at the University of Washington to study the structure and dynamics of protein molecules. In particular, he has been interested in protein folding and has been writing computer simulation software to better understand this fascinating process. Mike joined Google in 2012 and worked on creating a neuron-level map of fly and mouse brain tissue using computer vision and machine learning.

Mike became involved in creating sculpture and art in 2009 when he helped design and construct Groovik's Cube, a 35ft tall, functional, multi-player Rubik's cube installed in Reno, Seattle and New York. Since then his artistic work has focused both on traditional sculpture and modern technology, such as 3D printing and artificial neural networks.

His sculptures of protein molecules use cast glass and bronze and are based on the exact molecular coordinates of each respective biomolecule. They explore the hidden beauty of these amazing nanomachines, and have been shown around the world from Seattle to Japan.

Mike also works with artificial neural networks as an artistic medium and tool. In 2015 he created some of the first large-scale artworks using Iterative DeepDream and co-founded the Artists and Machine Intelligence program at Google. In 2017 he collaborated with Refik Anadol to create a pioneering immersive projection installation using Generative Adversarial Networks called "Archive Dreaming". His latest generative series "Portraits of Imaginary People" has been shown at ARS Electronica in Linz, Christie's in New York and at the New Museum in Karuizawa (Japan). His kinetic, AI-driven sculpture "Us and Them" was featured at the 2018 Mediacity Biennale at the Seoul Museum of Art and in 2019 at the Mori Art Museum in Tokyo.

For more information or availability of the pieces, contact him at:

Email: mike.tyka@gmail.com

Art Exhibitions/Installations

- 2019 Future and the Arts: AI, Robotics, Cities, Life, Mori Art Museum, Tokyo, "Us and Them"

- 2018 BAM! Glasstastic, Bellevue Arts Museum, Seattle, "Harvesting the Sap"

- 2018 Mediacity Biennale, Seoul Museum of Art, Seoul, "Us and Them"

- 2017 OIST, Okinawa, "Art and AI Aesthetics" exhibition, Three Deep Dreams

- 2017 Karuizawa New Museum, Karuizawa, "Art is Science II"

- 2017 ARS Electronica, Portraits of Imaginary People

- 2017 OutOfSight, Group Show: Portraits of Imaginary People

- 2017 SALT Institute, Istanbul, "Archive Dreaming" (with Refik Anadol)

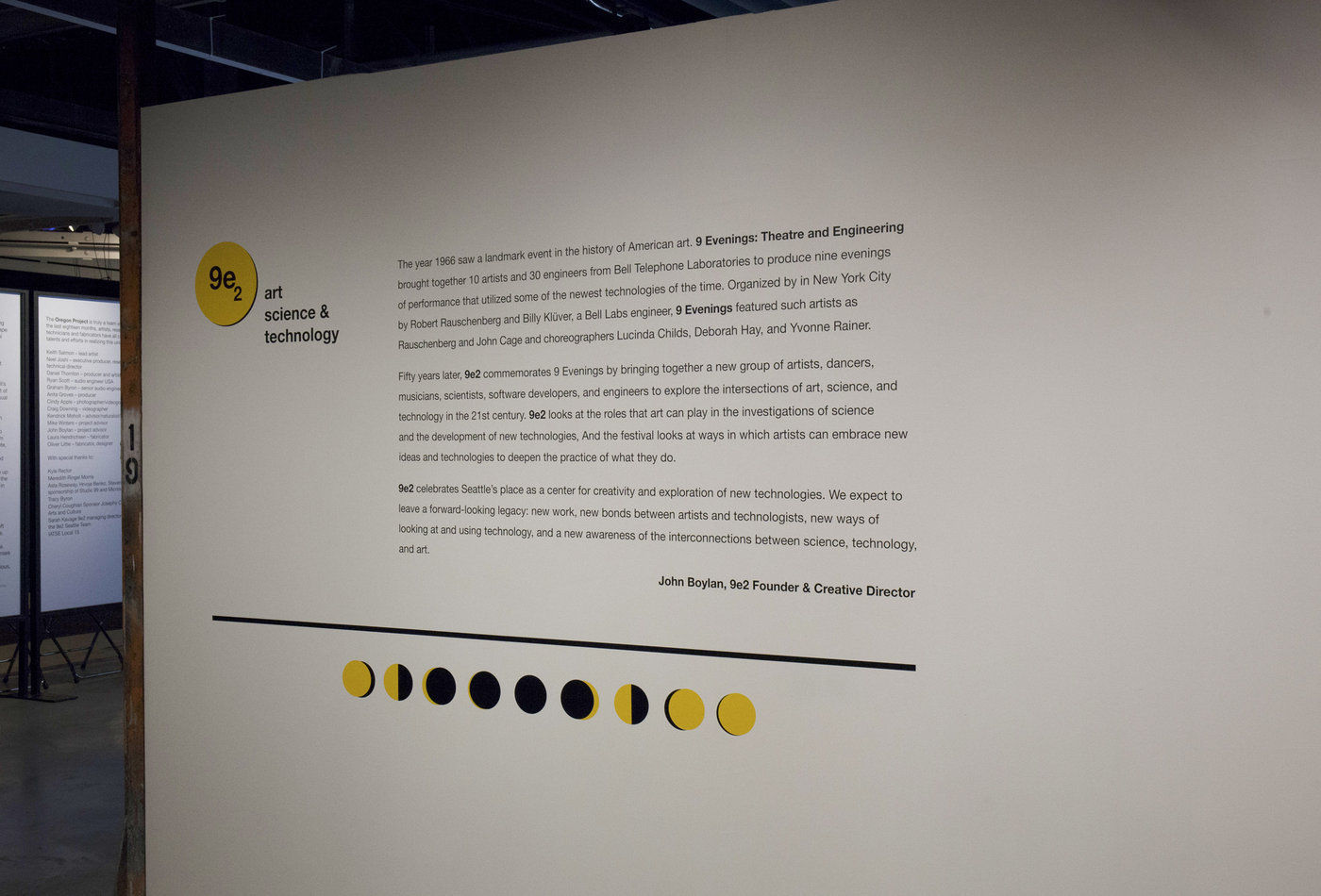

- 2016 9evenings2, Seattle, Group Show, Molecular sculpture

- 2016 9evenings2, Seattle, Live Performance "Butoh vs DeepDream"

- 2015 Grey Area Art Foundation, San Francisco, "The art of neural networks"

- 2014-2016 Fred Hutch Cancer Institute. Semi-permanent installation "Savior" and "Portal"

- 2013 Liberty Science Center, New Jersey, "Groovik's Cube III"

- 2011 Pacific Science Center, Seattle, "Groovik's Cube II"

- 2009 Black Rock City, Nevada, "Groovik's Cube"

Invited Talks

- 2019 Tokyo, Innovative City Forum 2019 - Future and the Arts Session

- 2017 OIST, Okinawa, "Art and AI Aesthetics" symposium

- 2017 Tokyo, Digital Hollywood University

- 2016 Alt-Ai Conference, New York

- 2016 Digi.Logue, Istanbul

- 2016 Google SPAN Tokyo

- 2016 Magenta Conference

- 2016 Google Cultural Institute Summit, Paris

- 2016 Research at Google conference

- 2016 UC Berkeley Center for New Media

- 2015 TEDx Munich

- 2012 Google IO, Invited speaker for Ignite talk.

- 2011 SciFoo, Mountain View, Invited speaker

- 2010 FooCamp, O'Reilly Media. Invited speaker for Ignite talk.

- 2007 CCPB Biomolecular Simulation

The machinery of life, an inevitably complex system, must constantly defend itself from intrusion and subversion by other agents inhabiting the biosphere. Ever more intricate systems for the detection and thwarting of intruding foreign life forms have evolved over the eons, culminating in adaptive immunity with one of its centerpieces: The Antibody. Also known as Immunoglobulin, this pronged, Y-shaped protein structure is capable of binding, blocking and neutralizing foreign objects such as bacteria or viruses.

The two tips of the Y have special patches which can tightly recognize and bind a target. Our body generates astronomical numbers of variants, each recognizing a different shape. The variety is so great that completely alien molecules can be recognized even though the body has never encountered them before. Once bound, the antibody blocks the function of the foreign object by physically occluding its functional parts. The Antibody sacrifices itself in the process, but not before signalling to the immune system to make more of its specific variant form. After the intruder in question has been fought off, memory cells remain in the bloodstream; should reinfection occur, they can quickly be reactivated to produce more of the successful variant Antibody.
